A static screenshot will never express the freedom of movement in an animated 3D scene, so we encourage you to try the examples mentioned in this chapter yourself. Download view3dscene, our X3D browser, from http://castle-engine.sourceforge.net/view3dscene.php. Then download our demo models from http://castle-engine.sourceforge.net/demo_models.php. You can now run view3dscene and open the various models inside the demo_models/compositing_shaders/ subdirectory. The water demos inside demo_models/water/ should also be interesting.

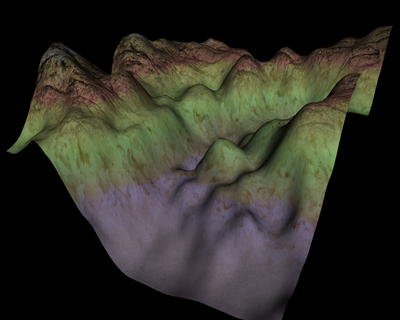

Effects may define and use their own uniform variables, including textures, just like the standard shader nodes. So we can combine any number of textures inside an effect. As an example we wrote a simple effect that mixes a couple of textures based on the terrain height. See Figure 8.1, “ElevationGrid with 3 textures mixed (based on the point height) inside the shader”.

We could also pass any other uniform value to the effect. For example, passing the current time from an X3D TimeSensor makes it possible to create animated effects.
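To make the height-based mixing concrete, here is a minimal sketch in Python of the kind of calculation such an effect could perform per fragment. It is not the code of our demo: the thresholds `h1`, `h2` and `blend`, and the function names, are assumptions chosen only for illustration. The helpers mirror the GLSL built-ins `smoothstep` and `mix`.

```python
def smoothstep(edge0: float, edge1: float, x: float) -> float:
    """Same shape as GLSL smoothstep: 0 below edge0, 1 above edge1."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def mix(a, b, t):
    """Component-wise linear interpolation, like GLSL mix()."""
    return tuple(x * (1.0 - t) + y * t for x, y in zip(a, b))


def terrain_color(height, low, middle, high, h1=0.3, h2=0.7, blend=0.1):
    """Blend three texture samples (RGB tuples) based on the point height.

    h1, h2 and blend are hypothetical thresholds, not values from the demo.
    """
    # Below h1 the "low" texture dominates, between h1 and h2 the "middle" one.
    color = mix(low, middle, smoothstep(h1 - blend, h1 + blend, height))
    # Above h2 the "high" texture takes over.
    return mix(color, high, smoothstep(h2 - blend, h2 + blend, height))
```

In a real effect the three colors would come from texture samplers passed as uniforms, and the height from the vertex position in object space.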

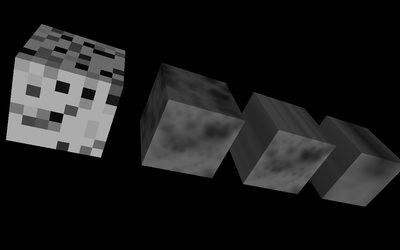

We can wrap 2D or 3D noise inside a ShaderTexture. See Figure 8.2, “3D and 2D smooth noise on GPU, wrapped in a ShaderTexture”. A texture node like NoiseTexture from InstantReality [NoiseTexture] may be implemented on the GPU by a simple prototype using the ShaderTexture.

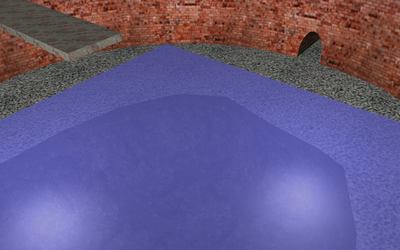

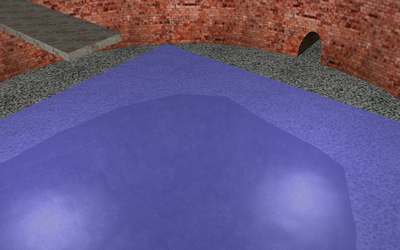

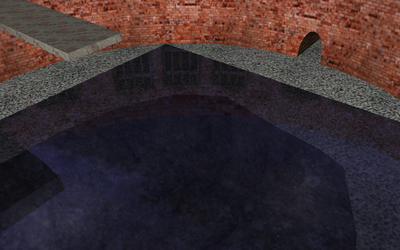

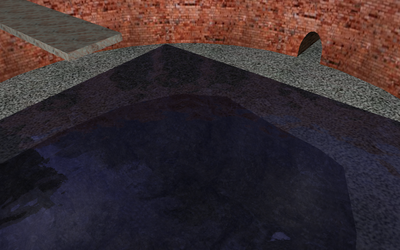

Water can be elegantly implemented using our effects, as a proper water simulation is naturally a combination of a couple of effects. To simulate waves we want to vary vertex heights, or vary per-fragment normal vectors (for best results, we want to do both). We also want to simulate the fact that water has reflections and is transparent. We have implemented nice-looking water using this approach, with (initially) two independent effect nodes. See Figure 8.3, “Water using our effects framework”.

We were also able to easily test two alternative approaches for generating water normal vectors. One approach was to take normals from a pre-recorded sequence of images (encoded inside an X3D MovieTexture, with noise images generated by the Blender renderer). The second approach was to calculate normals on the GPU from a generated smooth 3D noise. The implementation of these two approaches is contained in two separate Effect nodes, and is concerned only with calculating the normal vectors in object space. Yet another Effect node is responsible for transforming these normal vectors into eye space. This way we have extracted all the common logic into a separate effect, making it clear where the alternative versions differ and what they have in common. This was possible because one effect can define new plug names that can be used by other effects.

As for the question “Which approach to generating water normals turned out to be better?”, the answer is predictable: using GPU noise is slower and requires a better GPU, but it also improves the quality noticeably. With GPU noise, there is no problem with aliasing of the noise texture, and the noise parameters can be adjusted in real-time.
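The second approach, deriving normals from a smooth function instead of reading them from images, can be sketched as follows. This is only an illustration under stated assumptions: `wave_height` is a hypothetical sum of sines standing in for the smooth 3D noise, the water surface is assumed to lie in the XZ plane with Y pointing up, and the derivatives are estimated by central differences. Our actual effect may compute the normals differently.

```python
import math


def wave_height(x, z, time):
    """Hypothetical stand-in for a smooth 3D noise sampled at (x, z, time)."""
    return 0.1 * math.sin(x + time) + 0.05 * math.sin(2.3 * z + 1.7 * time)


def normal_from_height(height, x, z, time, eps=1e-3):
    """Object-space normal of the surface y = height(x, z, time).

    The partial derivatives are estimated with central differences.
    """
    dhdx = (height(x + eps, z, time) - height(x - eps, z, time)) / (2 * eps)
    dhdz = (height(x, z + eps, time) - height(x, z - eps, time)) / (2 * eps)
    # The unnormalized normal of a height field is (-dh/dx, 1, -dh/dz).
    nx, ny, nz = -dhdx, 1.0, -dhdz
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    return (nx / length, ny / length, nz / length)
```

The result is a unit vector in object space. Transforming it into eye space is a separate step, which matches the split into separate Effect nodes described above.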

Figure 8.3. Water using our effects framework


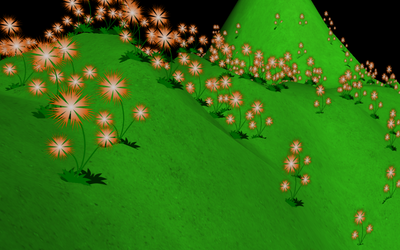

We also have plugs to change the geometry in object space. Since the effect is automatically integrated with all the browser shaders, you only need to code a simple function that changes the vertex positions. The effect instantly works with all the lighting and texturing conditions. Since the transformation is done on the GPU, there is practically no speed penalty for animating thousands of flowers in our test scene. See Figure 8.4, “Flowers bending under the wind, transformed on GPU in object space”.
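As an illustration of how simple such a vertex function can be, here is a sketch in Python of a wind-bending displacement. It is not the code of our flowers demo: the function name, the parameters `strength` and `speed`, and the quadratic falloff are all assumptions made for this example. The model is assumed to stand on the ground at y = 0 in object space.

```python
import math


def bend_vertex(vertex, time, strength=0.2, speed=2.0):
    """Shift a vertex sideways, more strongly the higher it is above y = 0.

    strength and speed are hypothetical tuning parameters.
    """
    x, y, z = vertex
    # The wind oscillates over time.
    sway = strength * math.sin(speed * time)
    # Quadratic falloff: the base of the flower stays fixed, the top moves most.
    offset = sway * max(y, 0.0) ** 2
    return (x + offset, y, z)
```

On the GPU the same calculation would run once per vertex, with `time` passed as a uniform (for example from a TimeSensor).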

We would like to emphasize that all the effects demonstrated here are, in theory, already possible to implement using the standard X3D Programmable shaders component [X3D Shaders]. However, such an implementation would be extremely cumbersome. You would first have to implement all the necessary multi-texturing, lighting, shadows, and other rendering features in shader code. This is a lot of work if we consider all the X3D rendering options. Also note that a shader should remain optimized for a particular setting. The only manageable way to do this, in a way that works for all the lighting and texturing conditions, is to write a shader generator program. This is exactly what our effects already do for you: the implementation of our effects constructs and links the appropriate shader code, gathering the information from all the nodes that affect the given shape. The result is nicely integrated with X3D: effects are specified at suitable nodes, and their uniform values and attributes are integrated with X3D fields.
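The idea of a shader generator that combines a base shader with effect code can be sketched in a few lines of Python. This is a heavily simplified illustration, not our implementation: the `/* PLUG: ... */` comment syntax, the `PLUG_` function prefix, and the names `BASE_SHADER` and `compose` are assumptions made for this example. The sketch only looks for plugs in the base shader, so it does not cover effects that define new plugs for other effects.

```python
import re

# A toy base shader with one extension point ("plug").
BASE_SHADER = """\
void main()
{
  vec4 fragment_color = vec4(1.0);
  /* PLUG: texture_apply (fragment_color) */
  gl_FragColor = fragment_color;
}
"""


def compose(base, effects):
    """Insert each effect's PLUG_<name> function into the base shader.

    effects is a list of GLSL snippets, each defining void PLUG_<name>(...).
    """
    declarations = []
    for index, code in enumerate(effects):
        match = re.search(r"\bPLUG_(\w+)", code)
        if match is None:
            continue  # the snippet does not use any plug
        plug = match.group(1)
        pattern = r"/\* PLUG: %s \((.*?)\) \*/" % re.escape(plug)
        plug_comment = re.search(pattern, base)
        if plug_comment is None:
            continue  # this shader has no such plug; ignore the effect
        # Rename the function so that many effects can use the same plug.
        unique = "%s_%d" % (plug, index)
        declarations.append(code.replace("PLUG_" + plug, unique))
        # Call the function just before the plug comment. The comment stays,
        # so later effects are called after earlier ones.
        call = "%s(%s);\n  " % (unique, plug_comment.group(1))
        start = plug_comment.start()
        base = base[:start] + call + base[start:]
    return "\n".join(declarations + [base])
```

For example, calling `compose(BASE_SHADER, [...])` with two snippets that each define `PLUG_texture_apply` produces one shader in which both functions are declared before `main` and called in order at the plug position.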