(Outdated) Overview of the Castle Game Engine and VRML 1.0

Copyright © 2006, 2007, 2008, 2009, 2010, 2011, 2012, 2013, 2014, 2022 Michalis Kamburelis

You can redistribute and/or modify this document under the terms of the GNU General Public License as published by the Free Software Foundation; either version 2 of the License, or (at your option) any later version.

Table of Contents

- Goals

- 1. Overview of VRML

- 2. Scene Manager

- 3. Reading, writing, processing VRML scene graph

- 4. Octrees

- 5. Ray-tracer rendering

- 6. OpenGL rendering

- 7. Animation

- 8. Shadow Volumes

- 9. Links

List of Figures

- 1.1. VRML 1.0 sphere example

- 1.2. VRML 2.0 sphere example

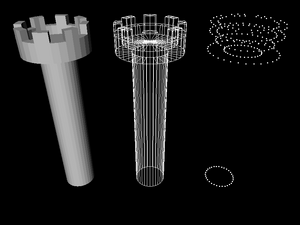

- 1.3. Cylinder example, rendered in wireframe mode (because it's unlit, non-wireframe rendering would look confusing)

- 1.4. VRML points example: yellow point at the bottom, blue point at the top

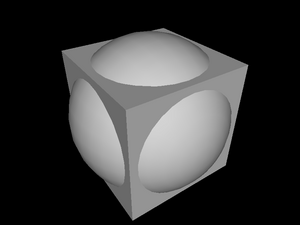

- 1.5. A cube and a sphere in VRML 1.0

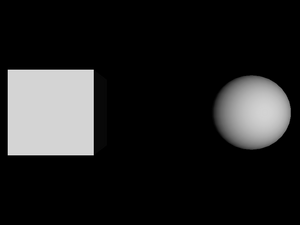

- 1.6. An unlit box and a sphere in VRML 2.0

- 1.7. A box and a translated sphere

- 1.8. A box, a translated sphere, and a translated and scaled sphere

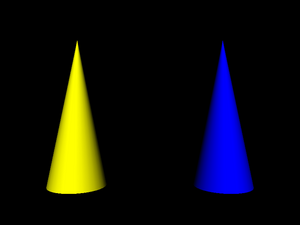

- 1.9. Two cones with different materials

- 1.10. A box and a translated sphere using the same texture

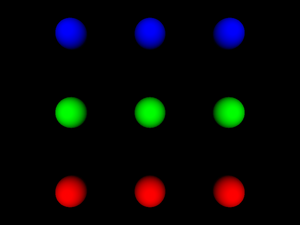

- 1.11. Three columns of three spheres

- 1.12. Faces, lines and point sets rendered using

the same

Coordinatenode - 1.13. Spheres with various material in VRML 1.0

- 1.14. An example how properties “leak out” from various grouping nodes in VRML 1.0

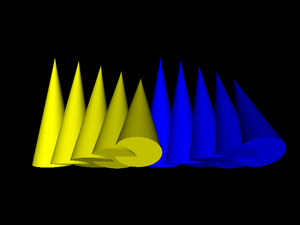

- 1.15. Our earlier example of reusing cone inlined a couple of times, each time with a slight translation and rotation

- 1.16. Textured cube with various texture transformations

- 1.17. Viewpoint defined for our previous example with multiplied cones

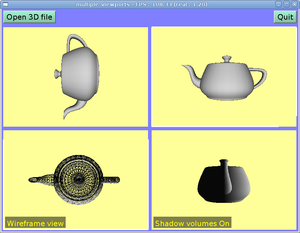

- 1.18. Three towers with various

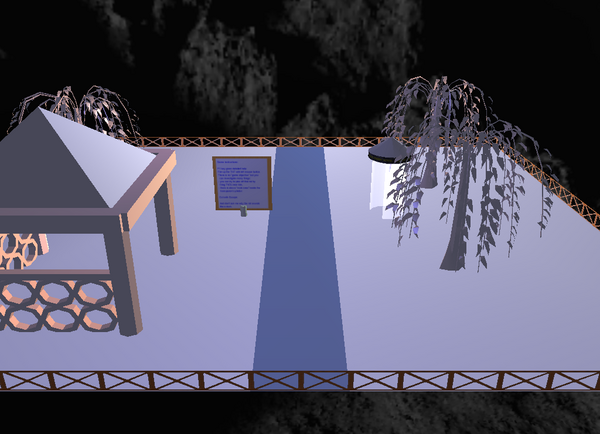

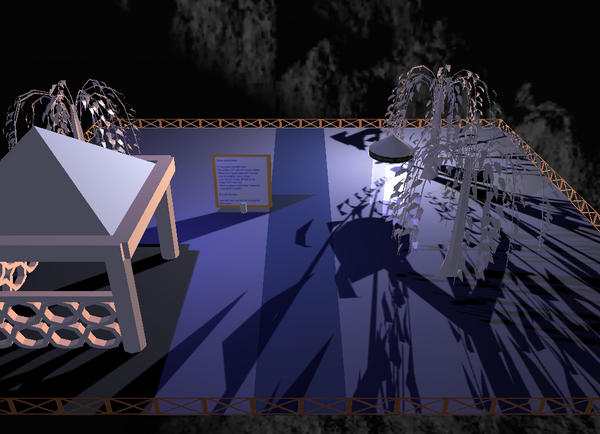

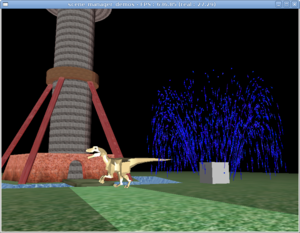

creaseAnglesettings - 2.1. Three 3D objects are rendered here: precalculated dinosaur animation, scripted (could be interactive) fountain animation, and static tower.

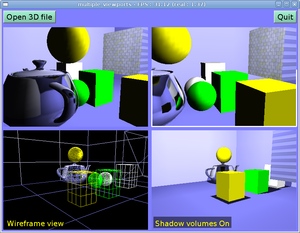

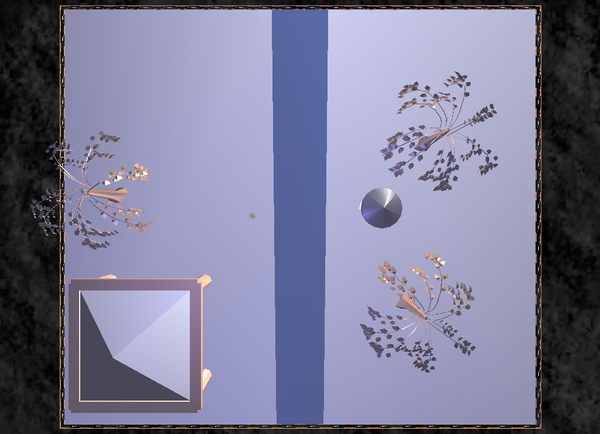

- 2.2. Simple scene, viewed from various viewports simultaneously.

- 2.3. Interactive scene, with shadows and mirors, viewed from various viewports.

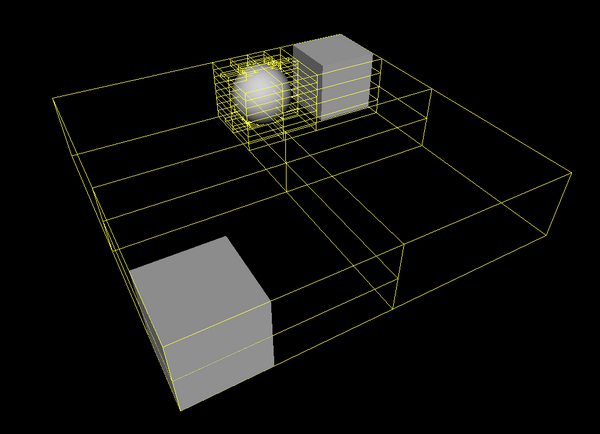

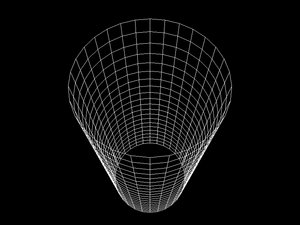

- 4.1. A sample octree constructed for a scene with two boxes and a sphere

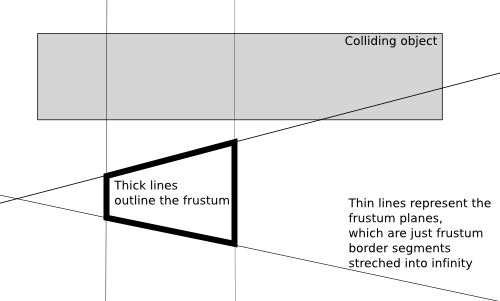

- 4.2. A nasty case when a box is considered to be colliding with a frustum, but in fact it's outside of the frustum

- 5.1. lets_take_a_walk scene, side view

- 5.2. lets_take_a_walk scene, top view

- 5.3. Generated ground texture

- 5.4. lets_take_a_walk scene, with ground texture. Side view

- 5.5. lets_take_a_walk scene, with ground texture. Top view.

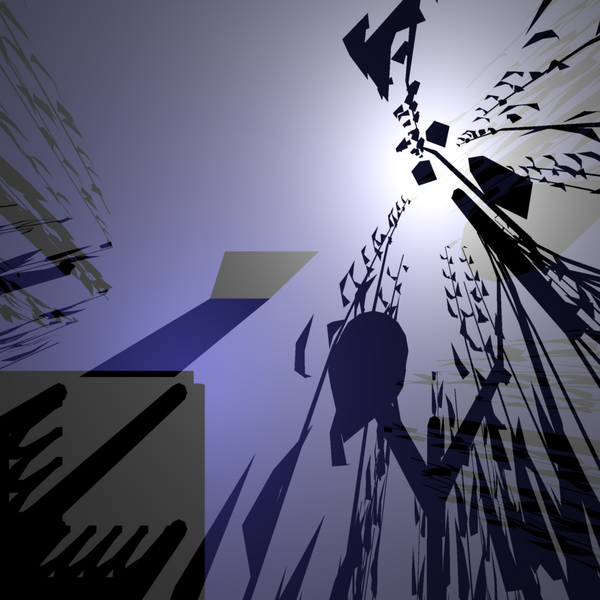

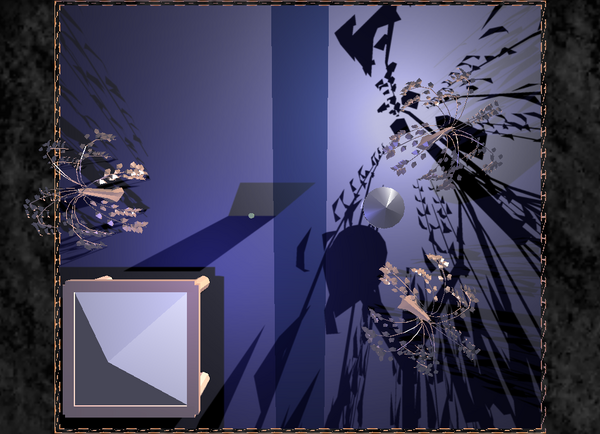

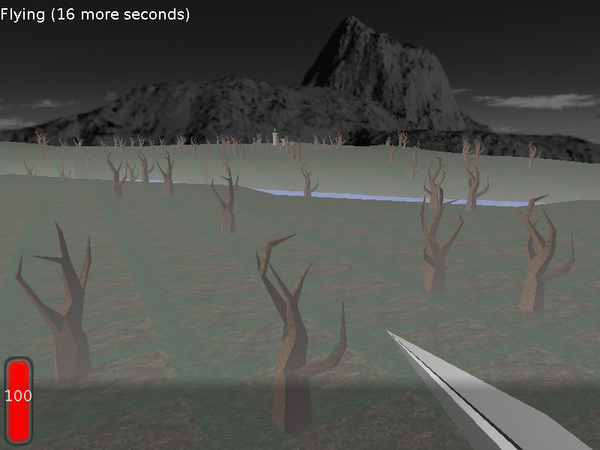

- 6.1. All the trees visible on this screenshot are actually the same tree model, only moved and rotated differently.

- 6.2. The correct rendering of the trees with volumetric fog

- 6.3. The wrong rendering of the trees with volumetric fog, if we would use the same arrays/VBO (containing fog coordinate for each vertex) for both trees.

- 6.4. Rendering without the fog (camera frustum culling is used)

- 6.5. Rendering with the fog (only objects within the fog visibility range need to be rendered)

- 6.6. The ghost creature on this screenshot is actually very close to the player. But it's transparent and is rendered incorrectly: gets covered by the ground and trees.

- 6.7. The transparent ghost rendered correctly: you can see that it's floating right before the player.

- 6.8. Material transparency with random stipples

- 6.9. Material transparency with regular stipples

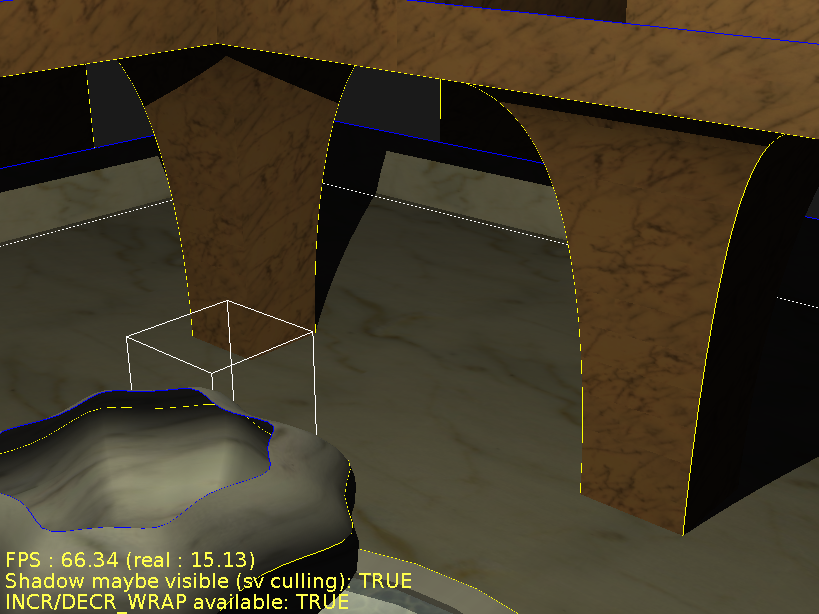

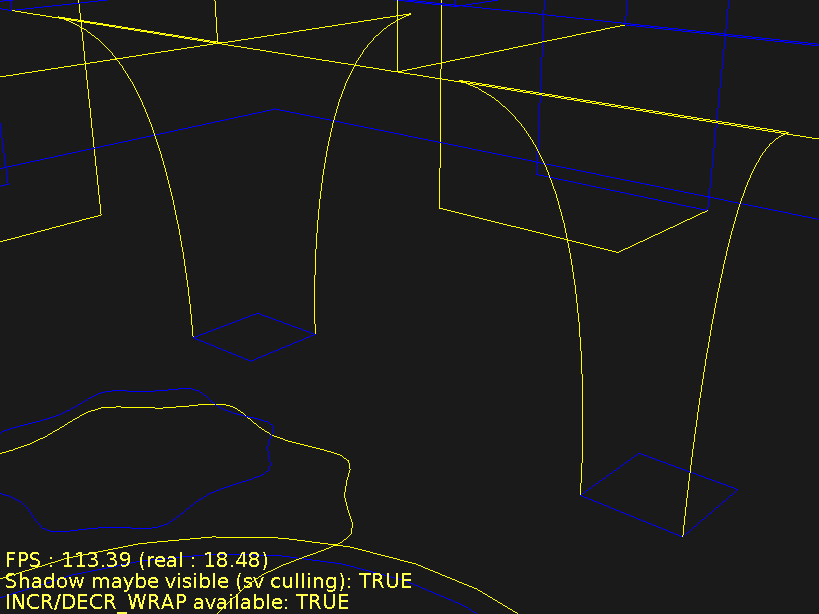

- 8.1. Fountain level, no shadows

- 8.2. Fountain level, shadows turned on

- 8.3. Fountain level, edges marked

- 8.4. Fountain level, only edges

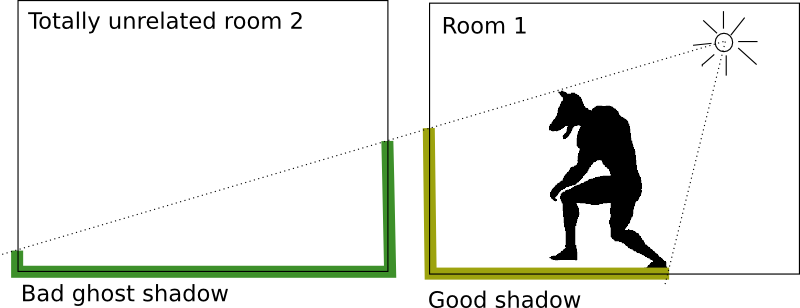

- 8.5. Ghost shadows

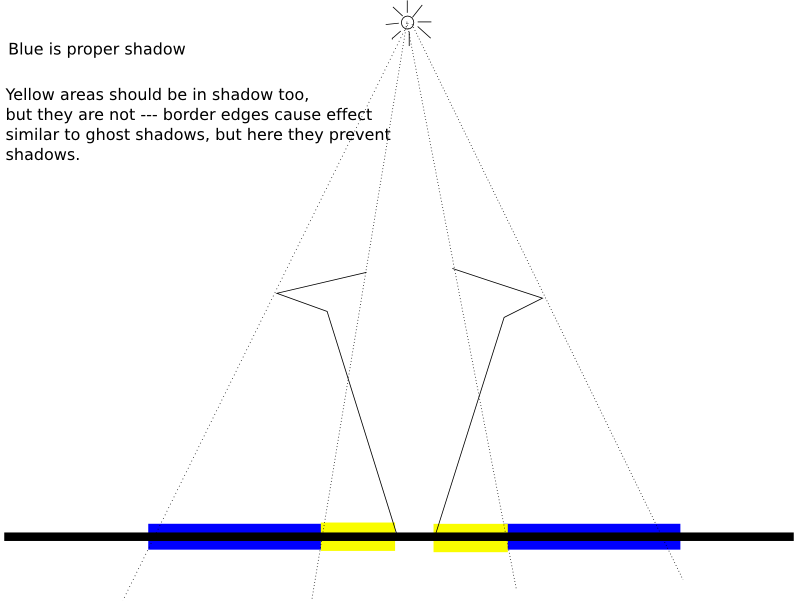

- 8.6. Lack of shadows, problem analogous to ghost shadows

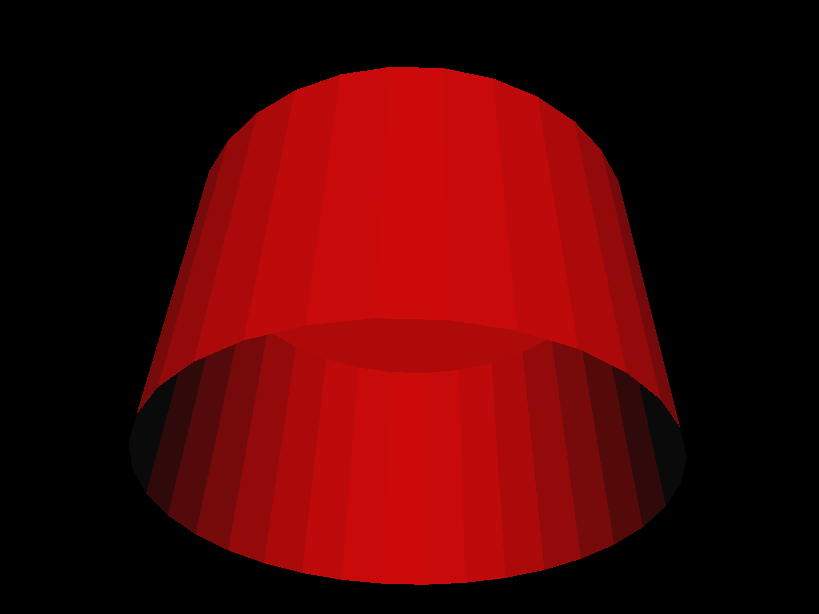

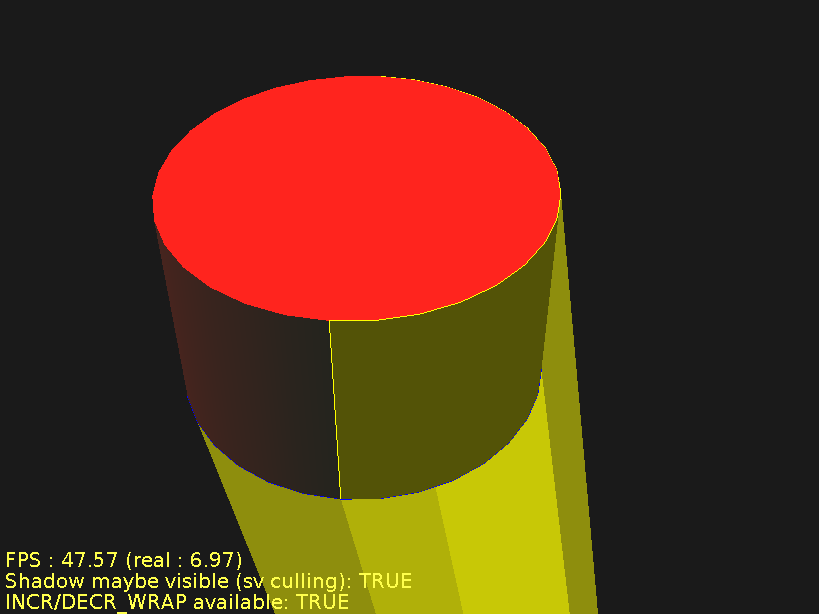

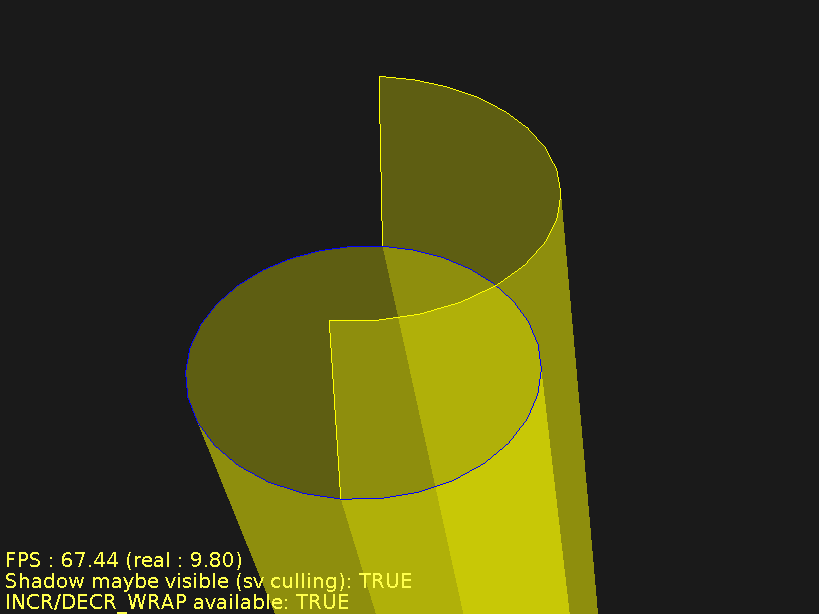

- 8.7. A cylinder capped at the top, open at the bottom

- 8.8. Cylinder open at the bottom with shadow quads

- 8.9. Cylinder open at the bottom with shadow edges

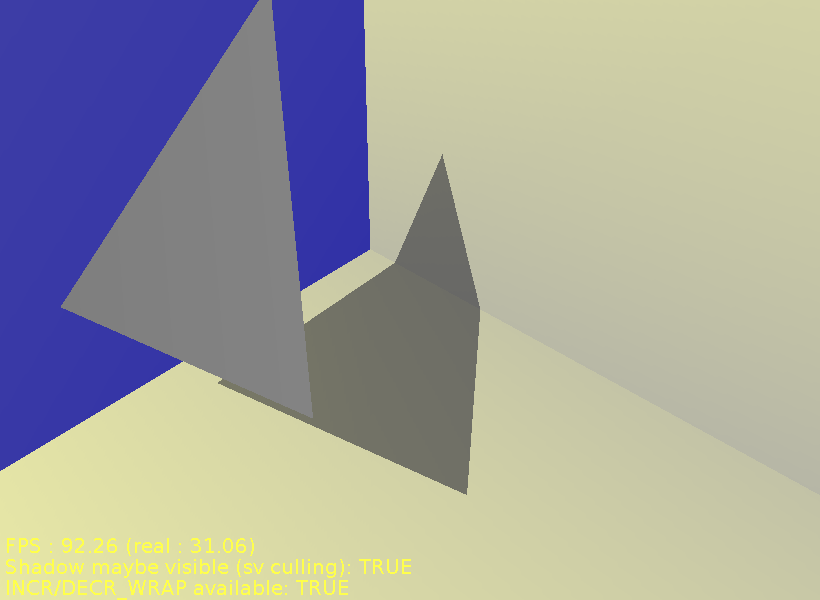

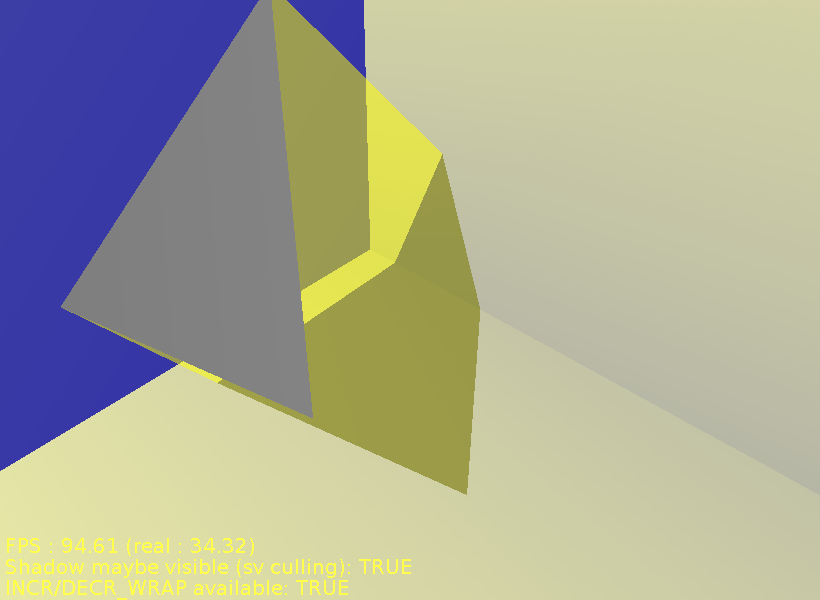

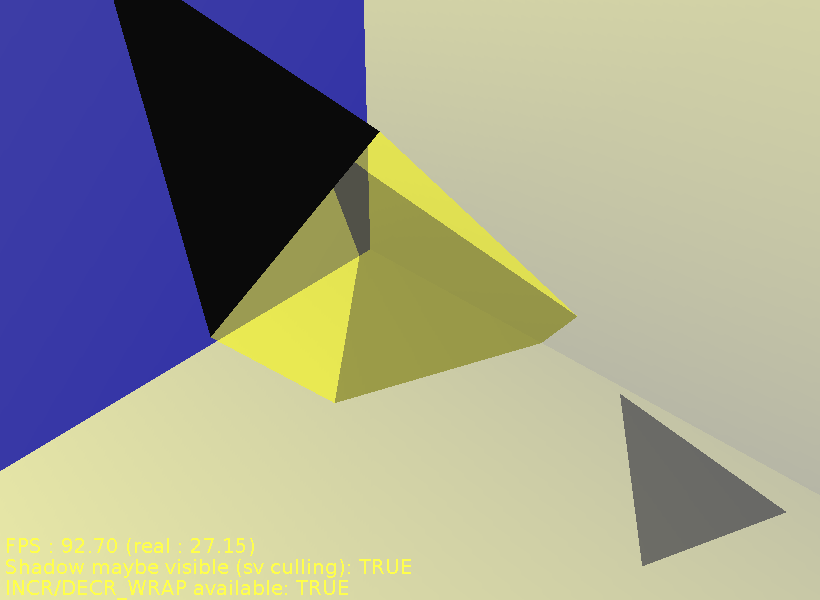

- 8.10. Good shadow from a single triangle

- 8.11. Good shadow from a single triangle, with shadow volumes drawn

- 8.12. Bad shadow from a single triangle

This document describes the implementation of a 3D engine based on the VRML and X3D languages.

The VRML language is used to define 3D worlds. X3D is simply VRML 3.0, also supported by our engine (since May 2008). We will have some introduction to the language in Chapter 1, Overview of VRML. VRML has many advantages over other 3D languages:

The specification of the language is open.

The language is implementation-neutral, which means that it's not “tied” to any particular rendering method or library. It's suitable for real-time rendering (e.g. using OpenGL or DirectX), it's also suitable for various software methods like ray-tracing. This neutrality includes the material and lighting model described in VRML 2.0 specification.

Inventor, an ancestor of the VRML, lacked such neutrality. Inventor was closely tied to the OpenGL rendering methods, including the OpenGL lighting model.

The language is quite popular and many 3D authoring programs can import and export models in this format. Some well-known open-source 3D modeling programs that can export to VRML are Blender and Art Of Illusion. White Dune is a modeller especially oriented towards VRML.

The language can describe geometry of 3D objects with all typical properties like materials, textures and normal vectors. More advanced features like multi-texturing, environment cube map texturing, shaders (in GLSL, NVidia Cg, HLSL) are also available in newest version (X3D).

The language is not limited to 3D objects. Other important environment properties, like lights, the sky, the fog, viewpoints, collision properties and many other can be expressed. Events mechanism allows to describe animations and user interactions with the scene.

The language is easy to extend. You can easily add your own nodes and fields (and I did, see the list of my VRML extensions).

Implementation goals were to make an engine that

Uses VRML / X3D. Some other 3D file formats are also supported (like 3DS, MD3, Wavefront OBJ and Collada) by silently converting them to VRML/X3D graph.

Allows to make a general-purpose VRML browser. See view3dscene.

Allows to make more specialized programs, that use the engine and VRML models as part of their job. For example, a game can use VRML models for various parts of the world:

Static environment parts (like the ground and the sky) can be stored and rendered as one VRML model.

Each creature, each item, each “dynamic” object of the world (door that can open, building that can explode etc.) can be stored and rendered as a separate VRML model.

When rendering, all these VRML objects can be rendered within the same frame, so that user sees the complete world with all objects.

Example game that uses my engine this way is “The Castle”.

Using the engine should be as easy as possible, but at the same time OpenGL rendering must be as fast as possible. This means that a programmer gets some control over how the engine will optimize given VRML model (or part of it). Different world parts may require entirely different optimization methods:

static parts of the scene,

parts of the scene that move (or rotate or scale etc.) only relatively to the static parts,

parts of the scene that frequently change inside (e.g. a texture changes or creature's arm rotates).

All details about optimization and animation methods will be given in later chapters (see Chapter 6, OpenGL rendering and Chapter 7, Animation).

The primary focus of the engine was always on 3D games, but, as described above, VRML models can be used and combined in various ways. This makes the engine suitable for various 3D simulation programs (oh, and various game types).

The engine is free open-source software (licensed on GNU General Public License).

Developed in object-oriented language. For me, the language of choice is ObjectPascal, as implemented in the Free Pascal compiler.

Table of Contents

This chapter is an overview of VRML concepts. It describes the language from the point of view of VRML author. It teaches how a simple VRML files look like and what are basic building blocks of every VRML file. It's intended to be a simple tutorial into VRML, not a complete documentation how to write VRML files. If you want to learn how to write non-trivial VRML files you should consult VRML specifications.

This chapter also describes main differences between VRML 1.0, 2.0 (also known as VRML 97) and 3.0 (more widely known as X3D). Our engine currently handles all these VRML versions. However, at the time of initial writing of this document, our engine supported only VRML 1.0 and basic 2.0, so more advanced and interesting VRML 2.0 and X3D concepts are only outlined at the end of this chapter — maybe this will be enhanced some day.

VRML files are normal text files, so they can be viewed and edited in any text editor. Here's a very simple VRML 1.0 file that defines a sphere:

#VRML V1.0 ascii

Sphere { }The first line is a header. It's purpose is to identify

VRML version and encoding used. Oversimplifying things a little,

every VRML 1.0 file will start with the exact same line:

#VRML V1.0 ascii.

After the header comes the actual content.

Like many programming languages, VRML language is a free-form

language, so the amount of whitespace in the file doesn't really matter.

In the example file above we see a declaration of a node

called Sphere. “Nodes” are the building

blocks of VRML: every VRML file specifies a directed graph of nodes.

After specifying the node name (Sphere),

we always put an opening brace (character {),

then we put a list of fields and

children nodes of our node,

and we end the node by a closing brace (character }).

In our simple example above, the Sphere

node has no fields specified and no children nodes.

The geometry defined by this VRML file is a sphere centered at the origin of coordinate system (i.e. point (0, 0, 0)) with a radius 1.0.

Why the sphere is centered at the origin?

Spheres produces by a

Spherenode are always centered at the origin — that's defined by VRML specifications. Don't worry, we can define spheres centered at any point, but to do this we have to use other nodes that will move ourSpherenode — more on this later.Why the sphere radius is 1.0?

This is the default radius of spheres produced by

Spherenode. We could change it by using theradiusfield of aSpherenode — more on this later.

Since the material was not specified, the sphere will use the default material properties. These make a light gray diffuse color (expressed as (0.8, 0.8, 0.8) in RGB) and a slight ambient color ((0.2, 0.2, 0.2) RGB).

An equivalent VRML 2.0 file looks like this:

#VRML V2.0 utf8

Shape {

geometry Sphere { }

}

As you can see, the header line is now different. It indicates VRML version as 2.0 and encoding as utf8 [1].

In VRML 2.0 we can't directly use a Sphere

node. Instead, we have to define a Shape node

and set it's geometry field to our desired

Sphere node. More on fields and children nodes

later.

Actually, our VRML 2.0 example is not equivalent to VRML 1.0 version: in VRML 2.0 version sphere is unlit (it will be rendered using a single white color). It's an example of a general decision in VRML 2.0 specification: the default behavior is the one that is easiest to render. If we want to make the sphere lit, we have to add a material to it — more on this later.

Every VRML node has a set of fields.

A field has a name, a type, and a default value. For example,

Sphere node has a field named radius,

of type SFFloat, that has a default value of 1.0.

There are many field types defined by VRML specification. Each field type specifies a syntax for field values in VRML file, and sometimes it specifies some interpretation of the field value. Example field types are:

SFFloat,SFDouble,SFTimeA float value. Syntax is identical to the syntax used in various programming languages, for example

3.1415926or12.5e-3.X3D added

SFDoubletype, which should be stored and processed with at least double precision.And there's the

SFTimefield type. It's syntax and internals are equivalent toSFDouble, but it has an added semantic: it specifies a time period or a point in time. In the latter case, this is the number of seconds passed since the Unix epoch (00:00:00 UTC on 1 January 1970). Although for single-player games, where time is not necessarily tied to the real-world time, sometimes other interpretations are useful, see my “VRML / X3D time origin considered uncomfortable” article.SFLong(in VRML 1.0),SFInt32(in VRML 2.0)A 32-bit integer value. As you can see, the name was changed in VRML 2.0 to indicate clearly the range of allowed values.

SFBoolA boolean value. Syntax: one word, either

FALSEorTRUE. Note that VRML is case-sensitive. In VRML 1.0 you could also write the number 0 (forFALSE) or 1 (forTRUE), but this additional syntax was removed from VRML 2.0 (since it's quite pointless).SFVec2f,SFVec3f,SFVec4fVector of 2, 3 or 4 floating point values. Syntax is to write them as a sequence of

SFFloatvalues, separated by whitespace. The specification doesn't say how these vectors are interpreted: they can be positions, they can be directions etc. The interpretation must be given for each case when some node includes a field of this type.The 4-component

SFVec4fwas added in X3D. X3D also added double-precision versions of these vectors:SFVec2d,SFVec3d,SFVec4d.SFColor,SFColorRGBA(X3D)Syntax of

SFColoris exactly likeSFVec3f, but this field has a special interpretation: it's an RGB (red, green, blue) color specification. Each component must be between 0.0 and 1.0. For example, this is a yellow color:1 1 0.X3D adds also 4-component type

SFColorRGBA, that adds alpha (opacity) value to the RGB color.SFRotationFour floating point values specifying rotation around an axis. First three values specify an axis, fourth value specifies the angle of rotation (in radians).

SFMatrix3f(X3D),SFMatrix3d(X3D),SFMatrix4f(X3D),SFMatrix4d(X3D),SFMatrix(VRML 1.0)3x3 and 4x4 matrix types, in single or double precision. Especially useful when transferring matrix data to GPU shaders.

VRML 1.0 had also a type named just

SFMatrix, this was equivalent to X3D'sSFMatrix4f.SFImageThis field type is used to specify image content for

PixelTexturenode in VRML 2.0 (Texture2node in VRML 1.0). This way you can specify texture content directly in VRML file, without the need to reference any external file. You can create grayscale, grayscale with alpha, RGB or RGB with alpha images this way. This is sometimes comfortable, when you must include everything in one VRML file, but beware that it makes VRML files very large (because the color values are specified in plain text, and they are not compressed in any way). See VRML specification for exact syntax of this field.An alternative, often better method to “inline” some file content inside VRML/X3D file is to use the data: URI. This allows you to inline file contents everywhere where normallny URI is accepted (for example, you can use normal

ImageTextureand it'surlfield), so it's more general solution. It's also more standard (not specific to VRML/X3D at all). And it allows to place compressed data (e.g. compressed PNG, JPG or any other file format, as specified by the mime type inside URI). Although compressed data will have to be encoded in base64, so it's not storage-optimal, but still it's usually much better thanSFImagenon-compressed format.The

data:URI is supported by most modern VRML/X3D browsers (including every program using our engine). So it's usually preferred over usingSFImage, for all but the tiniest images.SFStringA string, enclosed in double quotes. If you want to include double quote in a string, you have to precede it with the backslash (

\) character, and if you want to include the backslash in a string you have to write two backslashes. For example:"This is a string." "\"To be or not to be\" said the man." "Windows filename is c:\\3dmodels\\tree.wrl"Note that in VRML 2.0 this string can contain characters encoded in utf8 [2].

SFNodeThis is a special VRML 2.0 field type that contains other node as it's value (or a special value

NULL). More about this in Section 1.3, “Children nodes”.

All names of field types above start with SF,

which stands for “single-value field”. Most of these field types

have a counterpart, “multiple-value field”, with a name

starting with MF. For example MFFloat,

MFLong, MFInt32,

MFVec2f and MFVec3f.

The MF-field value is a sequence of any number

(possibly zero) of single field values. For example,

MFVec3f field specifies any number of 3-component

vectors and can be used to specify a set of 3D positions.

Syntax of multiple-value fields is:

An opening bracket (

[).A list of single field values separated by commas (in VRML 1.0) or whitespaces (in VRML 2.0). Note that in VRML 2.0 comma is also a whitespace, so if you write commas between values your syntax is valid in all VRML versions.

A closing bracket (

]). Note that you can omit both brackets if your MF-field has exactly one value.

Each node has a set of fields given by VRML specification. VRML file can specify value of some (maybe all, maybe none) node's fields. You can always leave the value of a field unspecified in VRML file, and it always is equivalent to explicitly specifying the default value for given field.

VRML syntax for specifying node fields is simple:

within node's braces ({ and })

place field's name followed by field's value.

Let's see some examples of specifying field values.

Sphere node has a field named

radius of type SFFloat

with a default value 1.0. So the file below is exactly

equivalent to our first sphere example in previous section:

#VRML V1.0 ascii

Sphere {

radius 1

}

And this is a sphere with radius 2.0:

#VRML V1.0 ascii

Sphere {

radius 2

}

Here's a VRML 2.0 file that specifies a cylinder that should be rendered

without bottom and top parts (thus creating a tube), with a

radius 2.0 and height 4.0. Three SFBool

fields of Cylinder are used:

bottom, side,

top (by default all are TRUE,

so actually we didn't have to write side TRUE).

And two SFFloat fields, radius

and height, are used.

Remember that in VRML 2.0 we can't just write the Cylinder

node. Instead we have to use the Shape node.

The Shape node has a field geometry

of type SFNode. By default, value of this field

is NULL, which means that no shape is actually defined.

We can place our Cylinder node as a value

of this field to correctly define a cylinder.

#VRML V2.0 utf8

Shape {

geometry Cylinder {

side TRUE

bottom FALSE

top FALSE

radius 2.0

height 10.0

}

}Figure 1.3. Cylinder example, rendered in wireframe mode (because it's unlit, non-wireframe rendering would look confusing)

Here's a VRML 2.0 file that specifies two points.

Just like in the previous example, we had to use a Shape node

and place PointSet node in it's geometry

field. PointSet node, in turn, has two more

SFNode fields: coord

(that can contain Coordinate node)

and color (that can contain Color node).

Coordinate node has a point field

of type MFVec3f — these are positions

of defined points. Color node has a

color field of type MFColor —

these are colors of points, specified in the same order as in

the Coordinate node.

Note that PointSet and Color

nodes have the same field name: color.

In the first case, this is an SFNode field,

in the second case it's an MFVec3f field.

#VRML V2.0 utf8

Shape {

geometry PointSet {

coord Coordinate { point [ 0 -2 0, 0 2 0 ] }

color Color { color [ 1 1 0, 0 0 1 ] }

}

}Now we're approaching the fundamental idea of VRML: some

nodes can be placed as a children of other nodes. We already

saw some examples of this idea in VRML 2.0 examples

above: we placed various nodes inside geometry

field of Shape node. VRML 1.0 has a little different

way of specifying children nodes (inherited from Inventor format)

than VRML 2.0 and X3D — we will see both methods.

In VRML 1.0, you just place children nodes inside the parent node. Like this:

#VRML V1.0 ascii

Group {

Sphere { }

Cube { width 1.5 height 1.5 depth 1.5 }

}Group is the simplest grouping node.

It has no fields, and it's only purpose is just to treat a couple of nodes

as one node.

Note that in VRML 1.0 it's required that a whole VRML file consists of exactly one root node, so we actually had to use some grouping node here. For example the following file is invalid according to VRML 1.0 specification:

#VRML V1.0 ascii

Sphere { }

Cube { width 1.5 height 1.5 depth 1.5 }

Nevertheless the above example is handled by many VRML engines, including our engine described in this document.

In VRML 2.0, you don't place children nodes directly

inside the parent node. Instead you place children nodes inside

fields of type SFNode (this contains

zero (NULL) or one node) or

MFNode (this contains any number (possibly zero)

of nodes). For example, in VRML 2.0 Group

node has an MFNode field children,

so the example file in VRML 2.0 equivalent to previous example looks like

this:

#VRML V2.0 utf8

Group {

children [

Shape { geometry Sphere { } }

Shape { geometry Box { size 1.5 1.5 1.5 } }

]

}Syntax of MFNode is just like for other multiple-valued

fields: a sequence of values, inside brackets ([

and ]).

Example above also shows a couple of other differences between VRML 1.0 and 2.0:

In VRML 2.0 we have to wrap

SphereandBoxnodes inside aShapenode.Node

Cubefrom VRML 1.0 was renamed toBoxin VRML 2.0.Size of the box in VRML 2.0 is specified using

sizefield of typeSFVec3f, while in VRML 1.0 we had three fields (width,height,depth) of typeSFFloat.

While we're talking about VRML versions differences, note also that

in VRML 2.0 a file can have any number of root nodes. So actually

we didn't have to use Group node in our example,

and the following would be correct VRML 2.0 file too:

#VRML V2.0 utf8

Shape { geometry Sphere { } }

Shape { geometry Box { size 1.5 1.5 1.5 } }

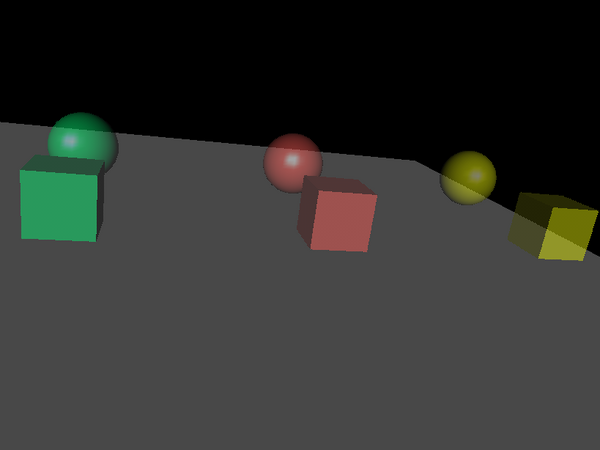

To be honest, we have to point one more VRML difference: as was mentioned before, in VRML 2.0 shapes are unlit by default. So our VRML 2.0 examples above look like this:

To make them lit, we must assign a material

for them. In VRML 2.0 you do this by placing a Material

node inside material field of Appearance

node. Then you place Appearance node inside

appearance field of

appropriate Shape node. Result looks like this:

#VRML V2.0 utf8

Group {

children [

Shape {

appearance Appearance { material Material { } }

geometry Sphere { }

}

Shape {

appearance Appearance { material Material { } }

geometry Box { size 1.5 1.5 1.5 }

}

]

}

We didn't specify any Material node's fields,

so the default properties will be used. Default VRML 2.0 material properties

are the same as for VRML 1.0: light gray diffuse color and a slight

ambient color.

As you can see, VRML 2.0 description gets significantly more verbose than VRML 1.0, but it has many advantages:

The way how children nodes are specified in VRML 2.0 requires you to always write an

SFNodeorMFNodefield name (as opposed to VRML 1.0 where you just write the children nodes). But the advantages are obvious: in VRML 2.0 you can explicitly assign different meaning to different children nodes by placing them within different fields. In VRML 1.0 all the children nodes had to be treated in the same manner — the only thing that differentiated children nodes was their position within the parent.As mentioned earlier, the default behavior of various VRML 2.0 parts is the one that is the easiest to render. That's why the default behavior is to render unlit, and you have to explicitly specify material to get lit objects.

This is a good thing, since it makes VRML authors more conscious about using features, and hopefully it will force them to create VRML worlds that are easier to render. In the case of rendering unlit objects, this is often perfectly acceptable (or even desired) solution if the object has a detailed texture applied.

Placing the

Materialnode inside theSFNodefield ofAppearance, and then placing theAppearancenode inside theSFNodefield ofShapemay seem like a “bondage-and-discipline language”, but it allows various future enhancements of the language without breaking compatibility. For example you could invent a node that allows to specify materials using a different properties (like by describing it's BRDF function, useful for rendering realistic images) and then just allow this node as a value for thematerialfield.Scenario described above actually happened. First versions of VRML 97 specification didn't include geospatial coordinates support, including a node

GeoCoordinate. A nodeIndexedFaceSethas a fieldcoordused to specify a set of points for geometry, and initially you could place aCoordinatenode there. When specification of geospatial coordinates support was formulated (and added to VRML 97 specification as optional for VRML browsers), all that had to be changed was to say that now you can placeGeoCoordinateeverywhere where earlier you could use onlyCoordinate.The

Shapenode in VRML 2.0 contains almost whole information needed to render given shape. This means that it's easier to create a VRML rendering engine. We will contrast this with VRML 1.0 approach that requires a lot of state information in Section 1.5, “VRML 1.0 state”.

Let's take a look at another grouping node:

VRML 2.0 Transform

node. This node specifies a transformation (a mix

of a translation, a rotation and a scale) for all it's children nodes.

The default field values are such that no transformation actually

takes place, because by default we translate by (0, 0, 0) vector,

rotate by zero angle and scale by 1.0 factor. This means that

the Transform node with all fields left as default

is actually equivalent to a Group node.

Example of a simple translation:

#VRML V2.0 utf8

Shape {

appearance Appearance { material Material { } }

geometry Box { }

}

Transform {

translation 5 0 0

children Shape {

appearance Appearance { material Material { } }

geometry Sphere { }

}

}Note that a child of a Transform node

may be another Transform node. All transformations

are accumulated. For example these two files are equivalent:

#VRML V2.0 utf8

Shape {

appearance Appearance { material Material { } }

geometry Box { }

}

Transform {

translation 5 0 0

children [

Shape {

appearance Appearance { material Material { } }

geometry Sphere { }

}

Transform {

translation 5 0 0

scale 1 3 1

children Shape {

appearance Appearance { material Material { } }

geometry Sphere { }

}

}

]

}#VRML V2.0 utf8

Shape {

appearance Appearance { material Material { } }

geometry Box { }

}

Transform {

translation 5 0 0

children Shape {

appearance Appearance { material Material { } }

geometry Sphere { }

}

}

Transform {

translation 10 0 0

scale 1 3 1

children Shape {

appearance Appearance { material Material { } }

geometry Sphere { }

}

}A

Switchnode allows you to choose only one (or none) from children nodes to be in the active (i.e. visible, participating in collision detection etc.) part of the scene. This is useful for various scripts and it's also useful for hiding nodes referenced later — we will see an example of this in Section 1.4, “DEF / USE mechanism”.A

Separatorand aTransformSeparatornodes in VRML 1.0. We will see what they do in Section 1.5, “VRML 1.0 state”.A

LODnode (the name is an acronym for level of detail) specifies a different versions of the same object. The intention is that all children nodes represent the same object, but with different level of detail: first node is the most detailed one (and difficult to render, check for collisions etc.), second one is less detailed, and so on, until the last node has the least details (it can even be empty, which can be expressed by aGroupnode with no children). VRML browser should choose the appropriate children to render based on the distance between the viewer and designated center point.A

Collisionnode is available in VRML 2.0 and X3D. It's very useful to disable collisions for particular shapes (visible but not collidable geometry), or to specify a “proxy” shape to be used for collisions. “Proxy” can be used to perform collisions with a complicated 3D object by a simpler shape, for example a complicated statue of a human could be surrounded by a simple box proxy for the sake of collisions. Also, this can be used to make collidable but invisible geometry.

VRML nodes may be named and later referenced. This allows

you to reuse the same node (which can be any VRML node type —

like a shape, a material, or even a whole group) more than once. The syntax

is simple: you name a node by writing

DEF <node-name> before node type.

To reuse the node, just write USE <node-name>.

This mechanism is available in all VRML versions.

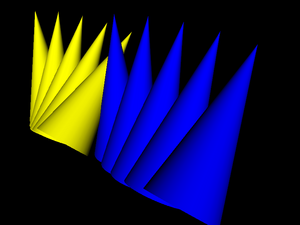

Here's a simple example

that uses the same Cone

twice, each time with a different material color.

#VRML V2.0 utf8

Shape {

appearance Appearance {

material Material { diffuseColor 1 1 0 }

}

geometry DEF NamedCone Cone { height 5 }

}

Transform {

translation 5 0 0

children Shape {

appearance Appearance {

material Material { diffuseColor 0 0 1 } }

geometry USE NamedCone

}

}Using DEF / USE mechanism makes your VRML files

smaller and easier to author, and it also allows

VRML implementations to save resources (memory, loading time...).

That's because VRML implementation can allocate the node

once, and then just copy the pointer to this node.

VRML specifications are formulated to make this

approach always correct, even when mixed with features like

scripting or sensors. Note that some nodes

can “pull” additional data with them

(for example ImageTexture nodes will load

texture image from file), so the memory saving may be even larger.

Consider these two VRML files:

#VRML V2.0 utf8

Shape {

appearance Appearance {

texture DEF SampleTexture

ImageTexture { url "../textures/test_texture.png" }

}

geometry Box { }

}

Transform {

translation 5 0 0

children Shape {

appearance Appearance {

texture USE SampleTexture

}

geometry Sphere { }

}

}#VRML V2.0 utf8

Shape {

appearance Appearance {

texture ImageTexture { url "../textures/test_texture.png" }

}

geometry Box { }

}

Transform {

translation 5 0 0

children Shape {

appearance Appearance {

texture ImageTexture { url "../textures/test_texture.png" }

}

geometry Sphere { }

}

}Both files above look the same when rendered, but in the first case VRML implementation loads the texture only once, since we know that this is the same texture node [3].

Note that the first node definition, with DEF

keyword, not only names the node, but also includes it in the file.

Often it's more comfortable to first define a couple of named

nodes (without actually using them) and then use them.

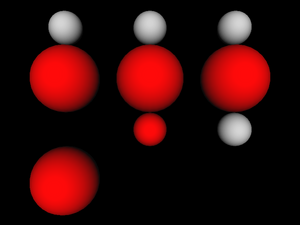

You can use the Switch node for this

— by default Switch node doesn't

include any of it's children nodes, so you can write

VRML file like this:

#VRML V2.0 utf8

Switch {

choice [

DEF RedSphere Shape {

appearance Appearance {

material Material { diffuseColor 1 0 0 } }

geometry Sphere { }

}

DEF GreenSphere Shape {

appearance Appearance {

material Material { diffuseColor 0 1 0 } }

geometry Sphere { }

}

DEF BlueSphere Shape {

appearance Appearance {

material Material { diffuseColor 0 0 1 } }

geometry Sphere { }

}

DEF SphereColumn Group {

children [

Transform { translation 0 -5 0 children USE RedSphere }

Transform { translation 0 0 0 children USE GreenSphere }

Transform { translation 0 5 0 children USE BlueSphere }

]

}

]

}

Transform { translation -5 0 0 children USE SphereColumn }

Transform { translation 0 0 0 children USE SphereColumn }

Transform { translation 5 0 0 children USE SphereColumn }

One last example shows a reuse of Coordinate

node. Remember that a couple of sections earlier we defined

a simple PointSet. PointSet

node has an SFNode field named coord.

You can place there a Coordinate node.

A Coordinate node, in turn, has a point

field of type SFVec3f that allows you to specify

point positions. The obvious question is “Why all this complexity?

Why not just say that coord field is

of SFVec3f type and directly include the point

positions?”. One answer was given earlier when talking

about grouping nodes: this allowed VRML specification for painless

addition of GeoCoordinate as an alternative

way to specify positions. Another answer is given by the example

below. As you can see, the same set of positions may be used

by a couple of different nodes[4].

#VRML V2.0 utf8

Shape {

appearance Appearance { material Material { } }

geometry IndexedFaceSet {

coord DEF TowerCoordinates Coordinate {

point [

4.157832 4.157833 -1.000000,

4.889094 3.266788 -1.000000,

......

]

}

coordIndex [

63 0 31 32 -1,

31 30 33 32 -1,

......

]

}

}

Transform {

translation 30 0 0

children Shape {

geometry IndexedLineSet {

coordIndex [

63 0 31 32 63 -1,

31 30 33 32 31 -1,

......

]

coord USE TowerCoordinates

}

}

}

Transform {

translation 60 0 0

children Shape {

geometry PointSet {

coord USE TowerCoordinates

}

}

}

Now that we know all about children relationships and DEF / USE mechanism, we can grasp the statement mentioned at the beginning of this chapter: every VRML file is a directed graph of nodes. It doesn't have cycles, although if we will forget about direction of edges (treat it as an undirected graph), we can get cycles (because of DEF / USE mechanism).

Note that VRML 1.0 file must contain exactly one root node,

while VRML 2.0 file is a sequence of any number of root nodes.

So, being precise, VRML graph doesn't have to be a connected graph.

But actually our engine when reading VRML file with many

root nodes just wraps them in an “invisible”

Group node. This special Group

node acts just like any other group node, but it's not written

back to the file (when e.g. using our engine to pretty-print VRML files).

This way, internally, we always see VRML file as a connected graph,

with exactly one root node.

In previous sections most of the examples were given only in VRML 2.0 version. Partially that's because VRML 2.0 is just newer and better, so you should use it instead of VRML 1.0 whenever possible. But partially that was because we avoided to explain one important behavior of VRML 1.0. In this section we'll fill the gap. Even if you're not interested in VRML 1.0 anymore, this information may help you understand why VRML 2.0 was designed the way it was, and why it's actually better than VRML 1.0. That's because part of the reasons of VRML 2.0 changes were to avoid the whole issue described here.

Historically, VRML 1.0 was based on Inventor file format,

and Inventor file format was

designed specifically with OpenGL implementation in mind.

Those of you who do any programming in OpenGL know that OpenGL

works as a state machine. This means

that OpenGL remembers a lot of “global” settings

[5]. When you want to render

a vertex (aka point) in OpenGL, you just call one simple command

(glVertex), passing only point coordinates.

And the vertex is rendered (along with a line or even a triangle

that it produces with other vertexes). What color does the vertex

has? The last color specified by glColor

call (or glMaterial, mixed with lights).

What texture coordinate does it have? Last texture coordinate

specified in glTexCoord call. What texture

does it use? Last texture bound with glBindTexture.

We can see a pattern here: when you want to know what property

our vertex has, you just have to check what value we last assigned

to this property. When we talk about OpenGL state, we talk

about all the “last glColor”,

“last glTexCoord” etc. values

that OpenGL has to remember.

Inventor, and then VRML 1.0, followed a similar approach.

“What material does a sphere use?” The one specified in the last

Material node. Take a look at the example:

#VRML V1.0 ascii

Group {

# Default material will be used here:

Sphere { }

DEF RedMaterial Material { diffuseColor 1 0 0 }

Transform { translation 5 0 0 }

# This uses the last material : red

Sphere { }

Transform { translation 5 0 0 }

# This still uses uses the red material

Sphere { }

Material { diffuseColor 0 0 1 }

Transform { translation 5 0 0 }

# Material changed to blue

Sphere { }

Transform { translation 5 0 0 }

# Still blue...

Sphere { }

USE RedMaterial

Transform { translation 5 0 0 }

# Red again !

Sphere { }

Transform { translation 5 0 0 }

# Still red.

Sphere { }

}Similar answers are given for other questions in the form “What is used?”. Let's compare VRML 1.0 and 2.0 answers for such questions:

What texture is used?

VRML 1.0 answer: Last

Texture2node.VRML 2.0 answer: Node specified in enclosing

Shapeappearance'stexturefield.What coordinates are used by

IndexedFaceSet?VRML 1.0 answer: Last

Coordinate3node.VRML 2.0 answer: Node specified in

coordfield of givenIndexedFaceSet.What font is used by by

AsciiTextnode (renamed to justTextin VRML 2.0)?VRML 1.0 answer: Last

FontStylenode.VRML 2.0 answer: Node specified in

fontStylefield of givenTextnode.

So VRML 1.0 approach maps easily to OpenGL.

Simple VRML implementation can just traverse the scene graph,

and for each node do appropriate set of OpenGL calls.

For example, Material node will correspond

to a couple of glMaterial and glColor

calls. Texture2 will correspond to binding

prepared OpenGL texture. Visible geometry nodes will cause

rendering of appropriate geometry, and so last

Material and Texture2

settings will be used.

In our example with materials above you can also

see another difference between VRML 1.0 and 2.0,

also influenced by the way things are done in OpenGL:

the way Transform node is used.

In VRML 2.0, Transform affected it's children.

In VRML 1.0, Transform node is not supposed to

have any children. Instead, it affects all subsequent nodes.

If we would like to translate last example to VRML 2.0,

each Transform node would have to be placed

as a last child of previous Transform node,

thus creating a deep nodes hierarchy. Alternatively, we could

keep the hierarchy shallow and just use

Transform { translation 5 0 0 ... }

for the first time, then Transform { translation 10 0 0 ... },

then Transform { translation 15 0 0 ... } and so on.

This means that simple VRML 1.0 implementation will just call

appropriate matrix transformations when processing Transform

node. In VRML 1.0 there are even more specialized

transformation nodes. For example a node Translation

that has a subset of features of full Transform node:

it can only translate. Such Translation

has an excellent, trivial mapping to OpenGL: just call

glTranslate.

There's one more important feature of OpenGL

“state machine” approach: stacks. OpenGL has a matrix

stack (actually, three matrix stacks for each matrix type) and

an attributes stack. As you can guess, there are nodes in VRML 1.0

that, when implemented in an easy way, map perfectly

to OpenGL push/pop stack operations: Separator

and TransformSeparator. When you use

Group node in VRML 1.0, the properties

(like last used Material and Texture2,

and also current transformation and texture transformation)

“leak” outside of Group node,

to all subsequent nodes.

But when you use Separator,

they do not leak out: all transformations and “who's the last

material/texture node” properties are unchanged

after we leave Separator node.

So simple Separator implementation in OpenGL

is trivial:

At the beginning, use

glPushAttrib(saving all OpenGL attributes that can be changed by VRML nodes) andglPushMatrix(for both modelview and texture matrices).Then process all children nodes of

Separator.Then restore state by

glPopAttribandglPopMatrixcalls.

TransformSeparator is a cross between

a Separator and a Group:

it saves only transformation matrix, and the rest of the state

can “leak out”. So to implement this in OpenGL,

you just call glPushMatrix (on modelview matrix)

before processing children and glPopMatrix

after.

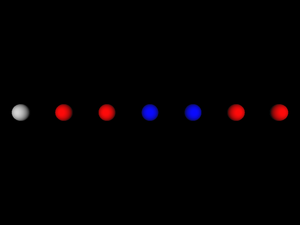

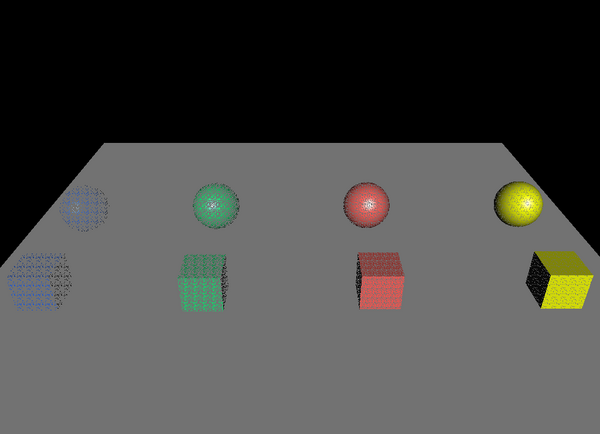

Below is an example how various VRML 1.0 grouping nodes allow

“leaking”. Each column starts with a standard

Sphere node. Then we enter some

grouping node (from the left: Group,

TransformSeparator and Separator).

Inside the grouping node we change material, apply scaling transformation

and put another Sphere node — middle

row always contains a red large sphere. Then we exit

from grouping node and put the third Sphere node.

How does this sphere look like depends on used grouping node.

#VRML V1.0 ascii

Separator {

Sphere { }

Transform { translation 0 -3 0 }

Group {

Material { diffuseColor 1 0 0 }

Transform { scaleFactor 2 2 2 }

Sphere { }

}

# A Group, so both Material change and scaling "leaks out"

Transform { translation 0 -3 0 }

Sphere { }

}

Transform { translation 5 0 0 }

Separator {

Sphere { }

Transform { translation 0 -3 0 }

TransformSeparator {

Material { diffuseColor 1 0 0 }

Transform { scaleFactor 2 2 2 }

Sphere { }

}

# A TransformSeparator, so only Material change "leaks out"

Transform { translation 0 -3 0 }

Sphere { }

}

Transform { translation 5 0 0 }

Separator {

Sphere { }

Transform { translation 0 -3 0 }

Separator {

Material { diffuseColor 1 0 0 }

Transform { scaleFactor 2 2 2 }

Sphere { }

}

# A Separator, so nothing "leaks out".

# The last sphere is identical to the first one.

Transform { translation 0 -3 0 }

Sphere { }

}There are some advantages of VRML 1.0 “state” approach:

It maps easily to OpenGL.

Such easy mapping may be also quite efficient. For example, if two nodes use the same

Materialnode, we can just change OpenGL material once (at the timeMaterialnode is processed). VRML 2.0 implementation must remember last setMaterialnode to achieve this purpose.It's flexible. The way transformations are specified in VRML 2.0 forces us often to create deeper node hierarchies than in VRML 1.0.

And in VRML 1.0 we can easier share materials, textures, font styles and other properties among a couple of nodes. In VRML 2.0 such reusing requires naming nodes by DEF / USE mechanism. In VRML 1.0 we can simply let a couple of nodes have the same node as their last

Material(or similar) node.

But there are also serious problems with VRML 1.0 approach, that VRML 2.0 solves.

The argumentation about “flexibility” of VRML 1.0 above looks similar to argumentation about various programming languages (...programming languages that should remain nameless here...), that are indeed flexible but also allow the programmer to “shoot himself in the foot”. It's easy to forget that you changed some material or texture, and accidentally affect more than you wanted.

Compare this with the luxury of VRML 2.0 author: whenever you start writing a

Shapenode, you always start with a clean state: if you don't specify a texture, shape will not be textured, if you don't specify a material, shape will be unlit, and so on. If you want to know how givenIndexedFaceSetwill look like when rendered, you just have to know it's enclosingShapenode. More precisely, the only things that you have to know for VRML 2.0 node to render it areenclosing

Shapenode,accumulated transformation from

Transformnodes,and some “global” properties: lights that affect this shape and fog properties. I call them “global” because usually they are applied to the whole scene or at least large part of it.

On the other hand, VRML 1.0 author or reader (human or program) must carefully analyze the code before given node, looking for last

Materialnode occurrence etc.The argumentation about “simple VRML 1.0 implementation” misses the point that such simple implementation will in fact suffer from a couple of problems. And fixing these problems will in fact force this implementation to switch to non-trivial methods. The problems include:

OpenGL stacks sizes are limited, so a simple implementation will limit allowed depth of

SeparatorandTransformSeparatornodes.If we will change OpenGL state each time we process a state-changing node, then we can waste a lot of time and resources if actually there are no shapes using given property. For example this code

Separator { Texture2 { filename "texture.png" } }will trick a naive implementation into loading from file and then loading to OpenGL context a completely useless texture data.

This seems like an irrelevant problem, but it will become a large problem as soon as we will try to use any technique that will have to render only parts of the scene. For example, implementing material transparency using OpenGL blending requires that first all non-transparent shapes are rendered. Also implementing culling of objects to a camera frustum will make many shapes in the scene ignored in some frames.

Last but not least: in VRML 1.0, grouping nodes must process their children in order, to collect appropriate state information needed to render each geometry. In VRML 2.0, there is no such requirement. For example, to render a

Groupnode in VRML 2.0, implementation can process and render children nodes in any order. Like said above, VRML 2.0 must only know about current transformation and global things like fog and lights. The rest of information needed is always contained within appropriateShapenode.VRML 2.0 implementation can even ignore some children in

Groupnode if it's known that they are not visible.Example situations when implementation should be able to freely choose which shapes (and in what order) are rendered were given above: implementing transparency using blending, and culling to camera frustum.

More about the way how we solved this problem for both VRML 1.0 and 2.0 in Section 3.10, “VRML scene”. More about OpenGL blending and culling to frustum in Section 6.4, “VRML scene class for OpenGL”.

Now that we're accustomed with VRML syntax and concepts, let's take a quick look at some notable VRML features that weren't shown yet.

A powerful tool of VRML is the ability to include one

model as a part of another. In VRML 2.0 we do this by

Inline node. It's url

field specifies the URL (possibly relative) of VRML file to load.

Note that our engine doesn't actually support URLs right now

and treats this just as a file name.

The content of referenced VRML file is placed at the position

of given Inline node. This means that you

can apply transformation to inlined content. This also

means that including the same file more than once is sensible

in some situations. But remember the remarks in Section 1.4, “DEF / USE mechanism”:

if you want to include the same file more than once, you should

name the Inline node and then just reuse it.

Such reuse will conserve resources.

url field is actually MFString

and is a sequence of URL values, from the most to least preferred one.

So VRML browser will try to load files from given URLs in order,

until a valid file will be found.

In VRML 1.0 the node is called WWWInline,

and the URL (only one is allowed, it's SFString

field) is specified in the field name.

When using our engine you can mix VRML/X3D versions and include VRML 1.0 file from VRML 2.0, or X3D, or the other way around. Moreover, you can include other 3D formats (like 3DS and Wavefront OBJ) too.

#VRML V2.0 utf8

DEF MyInline Inline { url "reuse_cone.wrl" }

Transform {

translation 1 0 0

rotation 1 0 0 -0.2

children [

USE MyInline

Transform {

translation 1 0 0

rotation 1 0 0 -0.2

children [

USE MyInline

Transform {

translation 1 0 0

rotation 1 0 0 -0.2

children [

USE MyInline

Transform {

translation 1 0 0

rotation 1 0 0 -0.2

children [

USE MyInline

] } ] } ] } ] }Figure 1.15. Our earlier example of reusing cone inlined a couple of times, each time with a slight translation and rotation

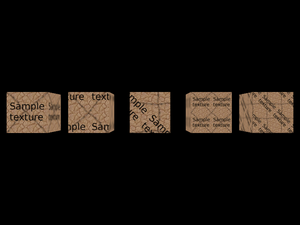

VRML allows you to specify a texture coordinate transformation. This allows you to translate, scale and rotate visible texture on given shape.

In VRML 1.0, you do this by Texture2Transform

node — this works analogous to Transform,

but transformations are only 2D. Texture transformations in VRML 1.0

accumulate, just like normal transformations. Here's an example:

#VRML V1.0 ascii

Group {

Texture2 { filename "../textures/test_texture.png" }

Cube { }

Transform { translation 3 0 0 }

Separator {

# translate texture

Texture2Transform { translation 0.5 0.5 }

Cube { }

}

Transform { translation 3 0 0 }

Separator {

# rotate texture by Pi/4

Texture2Transform { rotation 0.7853981634 }

Cube { }

}

Transform { translation 3 0 0 }

Separator {

# scale texture

Texture2Transform { scaleFactor 2 2 }

Cube { }

Transform { translation 3 0 0 }

# rotate texture by Pi/4.

# Texture transformation accumulates, so this will

# be both scaled and rotated.

Texture2Transform { rotation 0.7853981634 }

Cube { }

}

}Remember that we transform texture coordinates, so e.g. scale 2x means that the texture appears 2 times smaller.

VRML 2.0 proposes a different approach here:

We have similar TextureTransform node, but we can

use it only as a value for textureTransform field

of Appearance. This also means that there

is no way how texture transformations could accumulate.

Here's a VRML 2.0 file equivalent to previous VRML 1.0 example:

#VRML V2.0 utf8

Shape {

appearance Appearance {

texture DEF SampleTexture

ImageTexture { url "../textures/test_texture.png" }

}

geometry Box { }

}

Transform {

translation 3 0 0

children Shape {

appearance Appearance {

texture USE SampleTexture

# translate texture

textureTransform TextureTransform { translation 0.5 0.5 }

}

geometry Box { }

}

}

Transform {

translation 6 0 0

children Shape {

appearance Appearance {

texture USE SampleTexture

# rotate texture by Pi/4

textureTransform TextureTransform { rotation 0.7853981634 }

}

geometry Box { }

}

}

Transform {

translation 9 0 0

children Shape {

appearance Appearance {

texture USE SampleTexture

# scale texture

textureTransform TextureTransform { scale 2 2 }

}

geometry Box { }

}

}

Transform {

translation 12 0 0

children Shape {

appearance Appearance {

texture USE SampleTexture

# scale and rotate the texture.

# There's no way to accumulate texture transformations,

# so we just do both rotation and scaling by

# TextureTransform node below.

textureTransform TextureTransform {

rotation 0.7853981634

scale 2 2

}

}

geometry Box { }

}

}

You can specify various navigation information using

the NavigationInfo node.

typefield describes preferred navigation type. You can “EXAMINE” model, “WALK” in the model (with collision detection and gravity) and “FLY” (collision detection, but no gravity).avatarSizefield sets viewer (avatar) sizes. These typically have to be adjusted for each world to “feel right”. Although you should note that VRML generally suggests to treat length 1.0 in your world as “1 meter”. If you will design your VRML world following this assumption, then defaultavatarSizewill feel quite adequate, assuming that you want the viewer to have human size in your world. Viewer sizes are used for collision detection.Viewer size together with

visibilityLimitmay be also used to set VRML browsers Z-buffer near and far clipping planes. This is the case with our engine. By default our engine tries to calculate sensible values for near and far based on scene bounding box size.You can also specify moving speed (

speedfield), and whether head light is on (headlightfield).

To specify default viewer position and orientation in the

world you use Viewpoint node. In VRML 1.0,

instead of Viewpoint you have

PerspectiveCamera and

OrthogonalCamera (in VRML 2.0 viewpoint

is always perspective). Viewpoint and camera nodes may be generally

specified anywhere in the file. The first viewpoint/camera node

found in the file (but only in the active part of the file —

e.g. not in inactive children of Switch)

will be used as the starting position/orientation.

Note that viewpoint/camera nodes

are also affected by transformation.

Finally, note that my VRML viewer view3dscene has a useful function to print VRML viewpoint/camera nodes ready to be pasted to VRML file, see menu item “Console” -> “Print current camera node”.

Here's an example file. It defines a viewpoint (generated

by view3dscene) and a navigation info

and then includes actual world geometry from other file

(shown in our earlier example

about inlining).

#VRML V2.0 utf8

Viewpoint {

position 11.832 2.897 6.162

orientation -0.463 0.868 0.172 0.810

}

NavigationInfo {

avatarSize [ 0.5, 2 ]

speed 1.0

headlight TRUE

}

Inline { url "inline.wrl" }IndexedFaceSet nodes (and a couple of other

nodes in VRML 2.0 like ElevationGrid) have

some notable features to make their rendering better and

more efficient:

You can use non-convex faces if you set

convexfield toFALSE. It will be VRML browser's responsibility to correctly triangulate them. By default faces are assumed to be convex (following the general rule that the default behavior is the easiest one to handle by VRML browsers).By default shapes are assumed to be

solidwhich allows to use backface culling when rendering them.If you don't supply pre-generated normal vectors for your shapes, they will be calculated by the VRML browser. You can control how they will be calculated by the

creaseAnglefield: if the angle between adjacent faces will be less than specifiedcreaseAngle, the normal vectors in appropriate points will be smooth. This allows you to specify preferred “smoothness” of the shape. In VRML 2.0 by defaultcreaseAngleis zero (so all normals are flat; again this follows the rule that the default behavior is the easiest one for VRML browsers). See example below.For VRML 1.0 the

creaseAngle, backface culling and convex faces settings are controlled byShapeHintsnode.All VRML shapes have some sensible default texture mapping. This means that you don't have to specify texture coordinates if you want the texture mapped. You only have to specify some texture. For

IndexedFaceSetthe default texture mapping adjusts to shape's bounding box (see VRML specification for details).

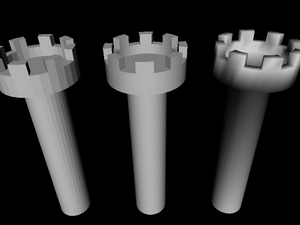

Here's an example of the creaseAngle

use. Three times we define the same geometry in IndexedFaceSet

node, each time using different creaseAngle values.

The left tower uses creaseAngle 0, so all

faces are rendered flat. Second tower uses creaseAngle 1

and it looks good — smooth where it should be.

The third tower uses creaseAngle 4,

which just means that normals are smoothed everywhere (this case

is actually optimized inside our engine, so it's calculated

faster) — it looks bad, we can see that normals are

smoothed where they shouldn't be.

#VRML V2.0 utf8

Viewpoint {

position 31.893 -69.771 89.662

orientation 0.999 0.022 -0.012 0.974

}

Transform {

children Shape {

appearance Appearance { material Material { } }

geometry IndexedFaceSet {

coord DEF TowerCoordinates Coordinate {

point [

4.157832 4.157833 -1.000000,

4.889094 3.266788 -1.000000,

......

]

}

coordIndex [

63 0 31 32 -1,

31 30 33 32 -1,

......

]

creaseAngle 0

}

}

}

Transform {

translation 30 0 0

children Shape {

appearance Appearance { material Material { } }

geometry IndexedFaceSet {

coordIndex [

63 0 31 32 -1,

31 30 33 32 -1,

......

]

coord USE TowerCoordinates

creaseAngle 1

}

}

}

Transform {

translation 60 0 0

children Shape {

appearance Appearance { material Material { } }

geometry IndexedFaceSet {

coordIndex [

63 0 31 32 -1,

31 30 33 32 -1,

......

]

coord USE TowerCoordinates

creaseAngle 4

}

}

}- Prototypes

These constructions define new VRML nodes in terms of already available ones. The idea is basically like macros, but it works on VRML nodes level (not on textual level, even not on VRML tokens level) so it's really safe.

- External prototypes

These constructions define syntax of new VRML nodes, without defining their implementation. The implementation can be specified in other VRML file (using normal prototypes mentioned above) or can be deduced by particular VRML browser using some browser-specific means (for example, a browser may just have some non-standard nodes built-in). If a browser doesn't know how to handle given node, it can at least correctly parse the node (and ignore it).

For example, many VRML browsers handle some non-standard VRML nodes. If you use these nodes and you want to make your VRML files at least readable by other VRML browsers, you should declare these non-standard nodes using external prototypes.

Even better, you can provide a list of proposed implementations for each external prototype. They are checked in order, VRML browser should chose the first implementation that it can use. So you can make the 1st item a URN that is recognized only by your VRML browser, and indicating built-in node implementation. And the 2nd item may point to a URL with another VRML file that at least partially emulates the functionality of this non-standard node, by using normal prototype. This way other VRML browsers will be able to at least partially make use of your node.

Our engine handles prototypes and external prototypes perfectly

(since around September 2007). We have some VRML/X3D extensions

(see

Castle Game Engine extensions list),

and they can be declared as external prototypes

with URN like

"urn:castle-engine.sourceforge.net:node:KambiOctreeProperties".

So other VRML browsers should be able to at least parse them.

X3D is a direct successor to VRML 2.0. X3D header even openly

specifies #X3D V3.0 utf8 (or 3.1,

or 3.2) admitting that it's just a 3rd version

of VRML.

X3D is almost absolutely compatible with VRML 2.0, meaning

that almost all VRML 2.0 files are also correct X3D files —

assuming that we change the header to indicate X3D and add trivial

PROFILE line.

Minor incompatible changes include renaming of access specifiers

(exposedField becomes inputOutput,

eventIn becomes inputOnly etc.),

and changes to some field names (Switch.choice and

LOD.level were renamed to Switch.children

and LOD.children, this made the “containerField” mechanism

of X3D XML encoding more useful). There was no revolutionary compatibility

break on the road to X3D, and everything that we said in this chapter

about VRML 2.0 applied also to X3D.

Some of the improvements of X3D:

- Encodings

VRML classic encoding is for compatibility with VRML 2.0.

XML encoding allows to validate and process X3D files with XML tools (like XML Schema, XSLT). It also allows easier implementation, since most programming languages include XML reading/writing support (usually using the DOM API). So you don't have to write lexer and parser (like for classic VRML).

Finally, binary encoding (not implemented in our engine yet) allows smaller files and makes parsing faster.

There is no requirement to support all three encodings in every X3D browser — you only have to support one. XML encoding is the most popular and probably the simpler to implement, so this is the suggested choice. All encodings are completely interchangeable, which means that we can convert X3D files back and forth from any encoding to any other, and no information is lost. Many tools exist to convert from one encoding to the other (our own engine can be used to convert between XML and classic encoding, see https://castle-engine.io/view3dscene.php#section_converting).

- Components and profiles

VRML 2.0 standard was already quite large, and implementing full VRML 2.0 browser was a difficult and long task. At the same time, pretty much everyone who used VRML for more advanced tasks wanted to extend it in some way. So it seemed that the standard was large, and it had to grow even larger... clearly, there was a problem.

The first part of the solution in X3D is to break the standard into many small components. Component is just a part of the specification dealing with particular functionality. The crucial part of each component are it's nodes, and some specification how these nodes cooperate with the rest of the scene. For example, there is a component with 2D geometry, called

Geometry2D. There is a component providing high-level shaders (GLSL, HLSL, Cg) support calledShaders. Currently (as of X3D edition 2) there are 34 components defined by the specification. Every node is part of some component. Naturally, some components depend on other components.Some components are complicated enough to be divided even more — into levels. For example, implementing component on lower level may mean that some node is only optionally supported, or maybe some of it's fields may be ignored, or maybe there may exist some limits on the data. For example, for the

Networkingcomponent, level 1 means that program must support only local (file://) absolute URLs. For level 2, additionallyhttp://must be supported, and URLs may be relative. On level 4 securehttps://must be additionally supported.The author of X3D file can request, at the beginning of X3D file, which components and on what levels must be supported to handle this file. For example, in classic VRML encoding lines

COMPONENT Networking:2 COMPONENT NURBS:1

mean that networking component must be support relative and and absolute

http://andfile://URLs and basic NURBS support is required.Now, the components and levels only divide the standard into small parts. It would be a nightmare to specify at the beginning of each file all required components. It would also do no good to compatibility across X3D browsers: if every browser would be allowed to support any set of any components, we would have no guarantee that even the most basic X3D file is supported by reasonable X3D browsers. So the second part of the solution are profiles. Profile is basically a set of components and their levels, and some additional conditions. There are only few profiles (six, as of X3D edition 2), like

Core,Interchange,InteractiveandFull. The idea is that when browser claims “I support Interchange profile”, then we already know quite a lot about what it supports (Interchange includes most of the static 3D data), and what it possibly doesn't support (interaction, like non-trivial sensors, is not included in the Interchange profile).Each X3D file must state at the beginning which profile it requires to operate. For example, in classic VRML encoding, the

PROFILEline is required, likePROFILE Interchange

Summing it up, the X3D author specifies first the profile and then optionally any number of components (and their levels) which must be supported (in addition to features already requested by the profile). Effectively, X3D browsers can support any components at any level, but they are also strongly pushed to support some high profile. X3D authors can request any profile and components combination they want, and are relatively safe to expect support from most browsers for Interchange or even Interactive profiles.

- New graphic features

As said, there are 34 X3D components, surely there are many new interesting nodes, far too many to actually list them here. You can take a quick look at the X3D specification table of contents at this point.

OK, some of the more interesting additions (not present in VRML 97 amendment 1), in my opinion: humanoid animation (H-Anim), programmable shaders, 3D texturing, cube map environmental texturing, rigid body physics, particle systems.

X3D is supported in our engine since May 2008.

One of the goals of VRML 97 was to allow creating animated and interactive 3D worlds. This feature really sets VRML above other 3D formats. We can define basic animations and interactions in pure VRML language, while also easy and natural integration with scripting languages is possible.

A couple of things make this working:

- Events

Each node has a set of events defined by the VRML standard[6]. There are input events, that can be send to the node (by routes and scripts, we will get to them soon). Input event provides some value to the node and tells the node to do something. There are also output events, that are conceptually generated “by the node”, when some situation occurs. Every event has a type, just like a VRML field. This type says what values can this event receive (input event) or send (output event). Specification says what events are available, and what do they actually do.

For example,

Viewpointnode has an inputset_bindevent ofSFBooltype. When you send aTRUEto this event, then the viewpoint becomes the current viewpoint, making camera jump to it. Thus, you can place manyViewpoints in VRML file, and switch user between them.As an example of output event, there is a

TimeSensornode that continuously sendstimeoutput event (ofSFTimetype). It sends current time value, in seconds (SFTimesimply contains double-precision floating point value).- Exposed fields

The most natural use for events is to set a field's value (by input event), and to generate notification when field's value changed (by output event). For example, we have an input event

set_translationforTransformnode, and analogoustranslation_changedevent. Together withtranslationfield, such triple is called an exposed field.A lot of fields are marked “exposed” in VRML standard. Analogous to above

Transform.translationexample, exposed fieldxxxis a normal field, plus an input event namedset_xxxthat sets field's value and generates output eventxxx_changed. This allows events mechanism to change the VRML graph at run-time.Some fields are not exposed (X3D calls them

initializeOnly), the idea is that VRML browser may need to do some time-consuming preparation to take this field into account, and it's not very common to change this value once VRML file is loaded. For example,creaseAngleofIndexedFaceSetis not an exposed field.- Routes

This is really the key idea, tying events mechanism together. A route connects one output event to some other input event. This means that when source output event is generated, the destination input event is fired. Destination event receives the value send by source event, naturally.

For example, consider

ProximitySensor, that sends a couple of output events when camera is within some defined box. In particular, it sendsposition_changedevent with current viewer position (asSFVec3fvalue). Let's say we want to make aCylinderthat hangs above camera, like a real cylinder hat. We can easily make a cylinder:DEF MyCylinder Transform { # We do not want to define translation field here, # it will be set by route children Transform { # This translation is to keep cylinder above the player # (otherwise player would be inside the cylinder) translation 0 2 0 children Shape { geometry Cylinder { } } } }How to make the cylinder move together with the player? We have to connect output event of

ProximitySensorwith input event ofMyCylinder:DEF MyProx ProximitySensor { } ROUTE MyProx.position_changed TO MyCylinder.set_translationAnd that's it! As you see, the crucial statement

ROUTEconnects two events (specifying their names, qualified by node names). What is important is that routes are completely independent from VRML file hierarchy, they can freely connect events between different nodes, no matter where in VRML hierarchy they are. Many routes may lead to a single input event, many routes may come out from a single output event. Loops are trivially possible by routes (VRML standard specifies how to avoid them: only one event is permitted to be send along one route during a single timestamp, this guarantees that any loop will be broken).- Sensor nodes

Exposed events and routes allow to propagate events. But how can we generate some initial event, to start processing? Sensor nodes answer this. We already saw examples of

TimeSensorandProximitySensor. There are many others, allowing events to be generated on object pick, mouse drag, key press, collisions etc. The idea is that VRML browser does the hard work of detecting situations when given sensor should be activated, and generates appropriate events from this sensor. Such events may be connected through routes to other events, thus causing the whole VRML graph to change because user e.g. clicked a mouse on some object.The beauty of this is that we can do many interesting things without writing anything that looks like an imperative programming language. We just declare nodes, connect their events with routes, and VRML browser takes care of handling everything.

- Interpolator nodes

These nodes allow to do animation by interpolation between a set of values. They all have a

set_fractioninput field, and upon receiving it they generate output eventvalue_changed. How the input fraction is translated to the output value is controlled by two fields:keyspecifies ranges of fraction values, andkeyValuespecifies corresponding output values. For example, here's a simple animation of sphere traveling along the square-shaped path:#VRML V2.0 utf8 DEF Timer TimeSensor { loop TRUE cycleInterval 5.0 } DEF Interp PositionInterpolator { key [ 0 0.25 0.5 0.75 1 ] keyValue [ 0 0 0 10 0 0 10 10 0 0 10 0 0 0 0 ] } DEF MySphere Transform { children Shape { geometry Sphere { } appearance Appearance { material Material { } } } } ROUTE Timer.fraction_changed TO Interp.set_fraction ROUTE Interp.value_changed TO MySphere.set_translation

Whole events mechanism is implemented in our engine since August 2008.

Scripting in VRML is very nicely defined on top of events and routes

mechanism.

The key VRML node here is the Script node. It's

url field specifies the script

— it's either an URL to the file containing actual script contents

(MIME type or eventually file extension will determine the script language),

or an inline script (starting with special protocol like

ecmascript: or castlescript:).

Moreover, you can define additional

fields and events within Script node.

Script node is special in this regard,

since most of the normal VRML nodes have a fixed set of fields and events.

Within Script, each node instance may have different

fields and events (some other VRML nodes use similar syntax,

like ComposedShader for uniform variables).

These “dynamic” fields/events are then treated as normal,

is particular you can connect them with other nodes' fields/events,

using normal VRML routes syntax.

For example:

DEF MyScript Script {

# Special fields/events for the script.

inputOnly SFTime touch_time

initializeOnly SFBool open FALSE

outputOnly SFTime close_time

outputOnly SFTime open_time

# Script contents --- in this case in CastleScript language,

# specified inline (script content is directly inside VRML file).

url "castlescript:

function touch_time(value, timestamp)

if (open,

close_time := timestamp,

open_time := timestamp);

open := not(open)

"

}

ROUTE SomeTouchSensor.touchTime TO MyScript.touch_time

ROUTE MyScript.close_time TO TimeSensor_CloseAnimation.startTime

ROUTE MyScript.open_time TO TimeSensor_OpenAnimation.startTime

The idea is that you can declare fields within script nodes using standard VRML syntax, and you route them to/from other nodes using standard VRML routes. The script contents say only what to do when input event is received, and may generate output events. This way the script may be treated like a “black box” by VRML browser: browser doesn't have to understand (parse, interpret etc.) the particular scripting language, and still it knows how this script is connected to the rest of VRML scene.

VRML 97 specification includes detailed description of Java and ECMAScript (JavaScript) bindings. X3D specification pushes this even further, by describing external language interface in a way that is “neutral” to actual programming language (which means that it should be applicable to pretty much all existing programming languages).

My engine doesn't support ECMAScript or Java scripting for now. But we have two usable script protocols:

compiled:protocol allows you to assign a compiled-in (that is, written in ObjectPascal and compiled in the program) handler to the script. See executing compiled-in code on Script events documentation.castlescript:protocol allows you to use a simple scripting language developed specifically for our engine. It allows you to receive, process and generate VRML events, being powerful enough for many scripting needs. Together with nodes likeKeySensorthis allows you to write full games/toys in pure VRML/X3D (without the need to compile anything). See https://castle-engine.io/castle_script.php for full documentation and many examples.

Scripts are implemented in our engine since October 2008.

Fun fact: this section of the documentation was initially called “Beyond what is implemented”. It was a list of various VRML 97 and X3D features not implemented yet in our engine. But with time, they were all gradually implemented, and the list of missing features got shorter and shorter... So now we list in this section many features that are implemented, but are documented elsewhere:

- NURBS

NURBS curves and surfaces. Along with interpolators to move other stuff along curves and surfaces. See NURBS.

- Environmental textures

Textures to simulate mirrors, auto-generated or loaded from files. See cube map texturing.

- Shaders

Full access to GPU shaders (OpenGL Shading Language). See shaders.

- Clicking and dragging sensors

Sensors to detect clicking and dragging with a mouse. Dragging sensors are particularly fun to allow user to visually edit the 3D world. See pointing device sensor.

- And much more...

See X3D / VRML for a complete and up-to-date list of all the X3D / VRML features supported in our engine. Including the standard X3D / VRML features and our extensions.

[1] VRML 2.0 files are always encoded using plain text in utf8. There was a plan to design other encodings, but it was never realized for VRML 2.0. VRML 2.0 files distributed on WWW are often compressed with gzip, we can say that it's a “poor-man's binary encoding”.

X3D (VRML 2.0 successor) filled the gap by specifying three encodings available: “classic VRML encoding” (this is exactly what VRML 2.0 uses), an XML encoding and a binary encoding. Our engine currently handles XML and classic X3D encoding.

[2] But also note that our engine doesn't support utf8

yet. In particular, when rendering Text node,

the string is treated as a sequence of 8-bit characters

in ISO-8859-1 encoding.