Programmable shaders component

This component defines nodes for using high-level shading languages available on modern graphics cards.

See also X3D specification of the Programmable shaders component.

Contents:

- Introduction

- Recommendation: Use shader effects (Effect, EffectPart nodes) instead of this

- Demos

- Support

- Features and examples

1. Introduction

The nodes documented here allow you to write custom shader code using GLSL (OpenGL Shading Language).

There are many resources to learn GLSL:

- OpenGL Shading Language at Wikipedia, which includes links to the Khronos specifications. This is your ultimate resource.

- For a gentler introduction to GLSL, explore e.g. LearnOpenGL.com.

2. Recommendation: Use shader effects (Effect, EffectPart nodes) instead of this

WARNING: We do not recommend using nodes documented here (ComposedShader, ShaderPart). Reasons:

- The nodes documented here require you to write the whole shader code. For example, if you just want to tweak one thing (e.g. shift the color hue), you still have to replicate all the lighting calculations (which are normally implemented by the engine).

- To use the nodes documented here, you need to know which uniforms are passed by the engine (uniforms are values passed from the Pascal engine to the shader code).

  Details about these uniforms are somewhat internal and may change from one engine version to another. They may even change depending on GPU capabilities, desktop vs mobile etc. For example, skinned animation results in a different set of uniforms depending on whether we do skinned animation on the GPU.

  Moreover, these uniforms are not portable between X3D implementations. E.g. in Castle Game Engine they are named castle_xxx.

We strongly recommend using shader effects instead, which means using the Effect and EffectPart nodes. They overcome the above problems:

- They allow you to write pieces of shader code that can be easily combined with the default rendering of the engine (and with other effects).

- You simply define a function called PLUG_xxx. This shader code will be merged with the browser shader code, and the PLUG_xxx function will be called when necessary. To get the standard engine information, use the parameters provided to the PLUG_xxx functions (not the castle_xxx uniforms). You can still freely access your own information through your own uniforms.

- They are implemented by Castle Game Engine and FreeWRL.

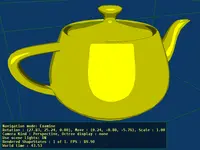

3. Demos

For complete demos of the features discussed here, see the shaders subdirectory inside our VRML/X3D demo models. You can open them with various Castle Game Engine X3D tools, in particular with Castle Model Viewer.

4. Support

ComposedShader (Pascal API: TComposedShaderNode) and ShaderPart (Pascal API: TShaderPartNode) nodes allow you to write shaders in the OpenGL Shading Language (GLSL). These are standard X3D nodes for replacing the default browser rendering with shaders.

5. Features and examples

5.1. Basic example

Examples below are in the classic (VRML) encoding.

To use shaders, add code like this inside the Appearance node:

    shaders ComposedShader {
      language "GLSL"
      parts [
        ShaderPart {
          type "VERTEX"
          url "my_shader.vs"
        }
        ShaderPart {
          type "FRAGMENT"
          url "my_shader.fs"
        }
      ]
    }

The simplest vertex shader code to place inside the my_shader.vs file would be:

    uniform mat4 castle_ModelViewMatrix;
    uniform mat4 castle_ProjectionMatrix;
    attribute vec4 castle_Vertex;

    void main(void)
    {
      gl_Position = castle_ProjectionMatrix * (castle_ModelViewMatrix * castle_Vertex);
    }

The simplest fragment shader code to place inside the my_shader.fs file would be:

    void main(void)
    {
      gl_FragColor = vec4(1.0, 0.0, 0.0, 1.0); /* red */
    }

5.2. Inline shader source code

You can write the shader source code directly inside a URL field (instead of putting it in an external file). The best way to do this, following the standards, is to use a data URI. In the simplest case, just start the URL with "data:text/plain," and then write your shader code.

The simplest example:

    shaders ComposedShader {
      language "GLSL"
      parts [
        ShaderPart {
          type "VERTEX"
          url "data:text/plain,
            uniform mat4 castle_ModelViewMatrix;
            uniform mat4 castle_ProjectionMatrix;
            attribute vec4 castle_Vertex;
            void main(void)
            {
              gl_Position = castle_ProjectionMatrix * (castle_ModelViewMatrix * castle_Vertex);
            }"
        }
        ShaderPart {
          type "FRAGMENT"
          url "data:text/plain,
            void main(void)
            {
              gl_FragColor = vec4(1.0, 0.0, 0.0, 1.0); /* red */
            }"
        }
      ]
    }

Another example: shaders_inlined.x3dv.
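If you generate X3D files from a script, a small helper can embed a GLSL source string into a ShaderPart as a "data:text/plain," URI. This is a minimal sketch in Python; the helper name is hypothetical (not part of Castle Game Engine), and it assumes the classic-encoding string rules, where a backslash and a double quote inside a string are escaped with a backslash. Like the examples above, it does not percent-encode the shader text.

```python
def inline_shader_part(shader_type: str, source: str) -> str:
    """Build a classic-encoding ShaderPart node whose url is a data: URI.

    Hypothetical helper for illustration only.
    """
    # Inside a classic-encoding string, escape backslashes first, then quotes.
    escaped = source.replace("\\", "\\\\").replace('"', '\\"')
    return (
        f'ShaderPart {{ type "{shader_type}" '
        f'url "data:text/plain,{escaped}" }}'
    )


if __name__ == "__main__":
    fragment = "void main(void) { gl_FragColor = vec4(1.0, 0.0, 0.0, 1.0); /* red */ }"
    print(inline_shader_part("FRAGMENT", fragment))
```

The printed node can be placed inside the parts list of a ComposedShader, exactly like the hand-written ShaderPart nodes above.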

In case of the X3D XML encoding, you can also place shader source code inside the CDATA.

As an extension (but compatible at least with InstantPlayer), we also treat a URL as containing direct shader source if it contains any newlines and doesn't start with any URL protocol. But it's better to use the "data:text/plain," prefix mentioned above.

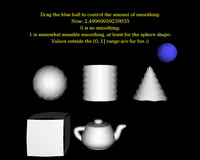

5.3. Passing values to GLSL shader uniform variables

You can set uniform variables for your shaders from VRML/X3D. Just add lines like

    inputOutput SFVec3f UniformVariableName 1 0 0

to your ComposedShader node. These uniforms may also be modified by events (when they are inputOutput or inputOnly). For example, here's a simple way to pass the current time (in seconds) to your shader:

    # somewhere within Appearance:
    shaders DEF MyShader ComposedShader {
      language "GLSL"
      parts [
        ShaderPart { type "VERTEX" url "my_shader.vs" }
        ShaderPart { type "FRAGMENT" url "my_shader.fs" }
      ]
      inputOnly SFTime time
    }

    # somewhere within a grouping node (e.g. at the top level of the VRML/X3D file) add:
    DEF MyProximitySensor ProximitySensor { size 10000000 10000000 10000000 }
    DEF MyTimer TimeSensor { loop TRUE }
    ROUTE MyProximitySensor.enterTime TO MyTimer.startTime
    ROUTE MyTimer.elapsedTime TO MyShader.time

The ProximitySensor node above makes the time start ticking from zero when you open the VRML/X3D file, so the float values in MyTimer.elapsedTime increase from zero. This is usually what you want, and it avoids precision problems with the huge values of MyTimer.time. See the notes about VRML / X3D time origin for more details.
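To see why huge time values are a problem, note that GLSL float uniforms are typically 32-bit. The Python sketch below emulates rounding to a 32-bit float with the standard struct module; the absolute time of 1.7e9 seconds (roughly seconds since 1970) is an assumed example value, not one taken from the engine.

```python
import struct


def to_float32(x: float) -> float:
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]


absolute_time = 1.7e9  # assumed magnitude of an absolute time in seconds
elapsed_time = 10.0    # elapsed seconds, counted from zero
frame = 1.0 / 60.0     # one frame at 60 FPS

# Near 1.7e9, adjacent 32-bit floats are 128 apart, so a one-frame step vanishes.
print(to_float32(absolute_time + frame) - to_float32(absolute_time))  # 0.0

# Near 10, the same step survives, with only a tiny rounding error.
print(to_float32(elapsed_time + frame) - to_float32(elapsed_time))  # roughly 0.01667
```

With an absolute time, an animation driven by the time uniform would effectively freeze between 128-second jumps; counting from zero keeps per-frame changes visible.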

Most field types can be passed to the corresponding GLSL uniform types. You can even set GLSL vectors and matrices, and use VRML/X3D multiple-value fields to set GLSL arrays. We support all the mappings between VRML/X3D and GLSL uniform types listed in the X3D specification.

TODO: except the (rather obscure) SFImage and MFImage types, which cannot be mapped to GLSL now.

Note: SFColor is mapped to GLSL vec3, and MFColor is mapped to GLSL vec3[], as these are RGB colors (without alpha information). The X3D 3.x specification said to map them to vec4 / vec4[] in GLSL, but that was simply an error, corrected in X3D 4.0. Use SFColorRGBA / MFColorRGBA to express RGBA colors; they map to vec4 / vec4[] in GLSL.
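As a quick reference, the sketch below encodes part of the VRML/X3D to GLSL uniform type mapping in Python and produces the matching GLSL declaration for a field. Only the SFVec3f, SFColor/MFColor and SFColorRGBA/MFColorRGBA rows are spelled out in this section; the other rows are assumed from the X3D specification's GLSL binding, so check the specification before relying on them. The helper name is hypothetical.

```python
from typing import Optional

# Partial table. SFVec3f, SFColor and SFColorRGBA follow the text above;
# the other rows are assumptions based on the X3D GLSL binding.
SF_TO_GLSL = {
    "SFBool": "bool",
    "SFInt32": "int",
    "SFFloat": "float",
    "SFTime": "float",
    "SFVec2f": "vec2",
    "SFVec3f": "vec3",
    "SFVec4f": "vec4",
    "SFColor": "vec3",      # RGB only (X3D 4.0; X3D 3.x wrongly said vec4)
    "SFColorRGBA": "vec4",  # RGBA
    "SFMatrix3f": "mat3",
    "SFMatrix4f": "mat4",
}


def uniform_declaration(x3d_type: str, name: str, length: Optional[int] = None) -> str:
    """Return the GLSL uniform declaration matching an X3D field type.

    MFxxx fields map to arrays of the SFxxx GLSL type, so they need a length.
    Unmapped types (e.g. SFImage, MFImage) raise KeyError.
    """
    if x3d_type.startswith("MF"):
        if length is None:
            raise ValueError("an MF field needs an array length")
        return f"uniform {SF_TO_GLSL['SF' + x3d_type[2:]]} {name}[{length}];"
    return f"uniform {SF_TO_GLSL[x3d_type]} {name};"


if __name__ == "__main__":
    print(uniform_declaration("SFVec3f", "UniformVariableName"))  # uniform vec3 UniformVariableName;
    print(uniform_declaration("MFColor", "palette", 4))           # uniform vec3 palette[4];
```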

5.4. Passing textures to GLSL shader uniform variables

You can also specify a texture node (as an SFNode field, or an array of textures in an MFNode field) as a uniform field value. The engine will load and bind the texture, and pass the bound texture unit to the GLSL uniform variable. This means that you can naturally pass a texture node to a GLSL sampler2D, sampler3D, samplerCube, sampler2DShadow and such.
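In OpenGL, a sampler uniform does not hold the texture itself but the index of the texture unit the texture is bound to. The Python sketch below models that idea: each texture field gets the next free unit, and an MFNode array gets one consecutive unit per element. The assignment order and the helper name are assumptions for illustration, not the engine's actual algorithm.

```python
from typing import Dict, List, Sequence, Tuple


def assign_texture_units(
    fields: Sequence[Tuple[str, int]], first_unit: int = 0
) -> Dict[str, List[int]]:
    """Map each texture uniform to the texture unit indices it would receive.

    fields: (uniform name, number of texture nodes) pairs; use 1 for an
    SFNode field and the array length for an MFNode field.
    """
    units: Dict[str, List[int]] = {}
    next_unit = first_unit
    for name, count in fields:
        units[name] = list(range(next_unit, next_unit + count))
        next_unit += count
    return units


if __name__ == "__main__":
    # e.g. "uniform sampler2D texture1;" and "uniform sampler2D layers[2];"
    print(assign_texture_units([("texture1", 1), ("layers", 2)]))
    # {'texture1': [0], 'layers': [1, 2]}
```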

The simplest demo of using this to combine 2 textures in a shader is demo-models/shaders/two_textures.x3dv. This demo, along with many more, is inside our VRML/X3D demo models (look inside the shaders/ subdirectory).

When using GLSL shaders in X3D, you should pass all the needed textures to them this way. The normal appearance.texture is ignored when using shaders. However, our engine has a special case that allows you to specify textures also in the traditional appearance.texture field: when ComposedShader doesn't contain any texture nodes, we still bind appearance.texture. This allows you to omit declaring texture nodes in the ComposedShader fields if you only have one texture. It also allows the renderer to reuse OpenGL shader objects more (as you will be able to DEF/USE ComposedShader nodes in X3D even when they use different textures). But this feature should not be used or depended upon in the long run.

Note that for now you have to pass textures in VRML/X3D fields declared as initializeOnly or inputOutput.

TODO: Using an inputOnly event to pass a texture node to a GLSL shader temporarily does not work; just use initializeOnly or inputOutput instead.

5.5. Passing attributes to GLSL shader

You can also pass per-vertex attributes to your shader: floats, vectors and matrices. The way to use this follows the X3D specification; see the FloatVertexAttribute (Pascal API: TFloatVertexAttributeNode), Matrix3VertexAttribute (Pascal API: TMatrix3VertexAttributeNode) and Matrix4VertexAttribute (Pascal API: TMatrix4VertexAttributeNode) nodes. You can place them in the attrib field of most geometry nodes (like IndexedFaceSet).

Example: attributes.x3dv shows how to pass elevation grid heights as shader attributes.
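Per the X3D specification, a FloatVertexAttribute node has a name (which must match the GLSL attribute variable), a numComponents value from 1 to 4, and a flat list of values. The Python sketch below writes such a node in the classic encoding from a list of numbers, e.g. one height per vertex; the helper and the attribute name "height" are hypothetical. The resulting node goes into the geometry node's attrib field, and the shader would declare a matching attribute float height;.

```python
from typing import Sequence


def float_vertex_attribute(name: str, values: Sequence[float], num_components: int = 1) -> str:
    """Write a classic-encoding FloatVertexAttribute node (hypothetical helper)."""
    if not 1 <= num_components <= 4:
        raise ValueError("numComponents must be between 1 and 4")
    if len(values) % num_components != 0:
        raise ValueError("value count must be a multiple of numComponents")
    numbers = " ".join(format(v, "g") for v in values)
    return (
        f'FloatVertexAttribute {{ name "{name}" '
        f"numComponents {num_components} value [ {numbers} ] }}"
    )


if __name__ == "__main__":
    # One height per vertex of a 2x2 grid.
    print(float_vertex_attribute("height", [0.0, 0.5, 1.0, 0.25]))
```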

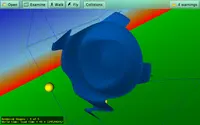

5.6. Geometry shaders


We support geometry shaders (in addition to the standard vertex and fragment shaders). To use them, simply set ShaderPart.type to "GEOMETRY" and put the code of your geometry shader inside ShaderPart.url.

What is a geometry shader?

A geometry shader is executed once for each primitive, e.g. once for each triangle. The geometry shader runs between the vertex shader and the fragment shader: it knows all the outputs from the vertex shader, and is responsible for passing them on to the rasterizer. The geometry shader uses the information about the given primitive: the vertex positions from the vertex shader (usually in eye or object space) and all the vertex attributes.

A single geometry shader invocation may generate any number of primitives (separated by the EndPrimitive call), so you can easily "explode" a simple input primitive into a number of others. You can also delete some original primitives based on some criteria. The type of the primitive may be changed by the geometry shader; for example, triangles may be converted to points or the other way around.
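Geometry shaders run on the GPU in GLSL, but their per-primitive contract is easy to model on the CPU. The Python sketch below is only a conceptual model (not GLSL and not engine code), and all names in it are hypothetical: run_geometry_stage calls a "shader" function once per input triangle and concatenates whatever it emits, which may be nothing (deletion), a primitive of a different type (triangle to point), or several primitives.

```python
from typing import Callable, List, Tuple

Vertex = Tuple[float, float, float]
Primitive = Tuple[Vertex, ...]  # 1 vertex = point, 3 vertices = triangle


def run_geometry_stage(
    triangles: List[Primitive],
    shader: Callable[[Primitive], List[Primitive]],
) -> List[Primitive]:
    """Invoke `shader` once per input primitive and collect everything it emits."""
    emitted: List[Primitive] = []
    for triangle in triangles:
        emitted.extend(shader(triangle))
    return emitted


def triangle_to_centroid(triangle: Primitive) -> List[Primitive]:
    """Change the primitive type: each triangle becomes one point at its centroid."""
    xs, ys, zs = zip(*triangle)
    return [((sum(xs) / 3, sum(ys) / 3, sum(zs) / 3),)]


def drop_below_ground(triangle: Primitive) -> List[Primitive]:
    """Delete primitives by a criterion: keep a triangle only if some vertex has y >= 0."""
    return [triangle] if any(v[1] >= 0 for v in triangle) else []


if __name__ == "__main__":
    tris = [((0.0, 0.0, 0.0), (3.0, 0.0, 0.0), (0.0, 3.0, 0.0))]
    print(run_geometry_stage(tris, triangle_to_centroid))  # [((1.0, 1.0, 0.0),)]
```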

Examples of geometry shaders with ComposedShader:

- Download a basic example X3D file with geometry shaders

- Another example of geometry shaders: geometry_shader_fun_smoothing.

We also have a more flexible approach to geometry shaders as part of our compositing shaders extensions. The most important advantage is that you can implement only the geometry shader, and use the default vertex and fragment shader code (which does the boring stuff like texturing, lighting etc.). Inside the geometry shader you have geometryVertexXxx functions to pass through or blend input vertices in any way you like. Everything is described in detail in our compositing shaders documentation; in particular, see the chapter "Extensions for geometry shaders".

Examples of geometry shaders with Effect:

5.6.1. Geometry shaders before GLSL 1.50 not supported

Our implementation of geometry shaders targets only geometry shaders as available in OpenGL core 3.2 and later (GLSL version 1.50 or later).

Earlier OpenGL and GLSL versions had geometry shaders available only through the extensions ARB_geometry_shader4 or EXT_geometry_shader4. They had the same purpose, but the syntax and calls were different and incompatible. For example, vertex positions were in gl_PositionIn instead of gl_in.

The most important incompatibility was that the input and output primitive types, and the maximum number of vertices generated, were specified outside of the shader source code. To handle this, an X3D browser would have to make special OpenGL calls (glProgramParameteriARB/EXT), and these additional parameters would have to be placed inside special fields of the ComposedShader.

The InstantReality ComposedShader adds the fields geometryInputType, geometryOutputType and geometryVerticesOut specifically for this purpose (see also the bottom of the InstantReality shaders overview).

See a simple example on Wikipedia of the differences in GLSL geometry shaders before and after GLSL 1.50.

We have decided not to implement the old-style geometry shaders. The implementation would complicate the code (we would need to handle new fields of the ComposedShader node) and have little benefit: it would be usable only with old OpenGL versions, and to make geometry shaders work with both old and new OpenGL versions, authors would have to provide two separate versions of their geometry shaders.

So we just require geometry shaders to conform to the GLSL >= 1.50 syntax. On older GPUs, you will not be able to use geometry shaders at all.

5.6.2. Macro CASTLE_GEOMETRY_INPUT_SIZE

Unfortunately, ATI graphics cards have problems with geometry shader inputs. When the input array may be indexed by a variable (not a constant), it has to be declared with an explicit size. Otherwise you get the shader compilation error '[' : array must be redeclared with a size before being indexed with a variable. The input size actually depends on the input primitive, so in general you have to write:

    in float my_variable[gl_in.length()];

Unfortunately, the above syntax does not work on NVidia, which does not treat gl_in.length() as a constant. On the other hand, NVidia doesn't require an explicit size in the input array declaration. So there you have to write:

    in float my_variable[];

That's very cool, right? We know how to do it on ATI, but it doesn't work on NVidia. We know how to do it on NVidia, but it doesn't work on ATI. Welcome to the world of modern computer graphics :)

To enable you to write simple and robust geometry shaders, our engine provides a macro CASTLE_GEOMETRY_INPUT_SIZE that expands to the appropriate text (or nothing) for the current GPU. So you can just write:

    in float my_variable[CASTLE_GEOMETRY_INPUT_SIZE];
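How the engine defines this macro is not described here, but the idea can be sketched as a preprocessing step: insert a #define that expands to gl_in.length() on GPUs that need an explicit size, and to nothing elsewhere. The Python sketch below is hypothetical, including the needs_explicit_size flag that stands in for real GPU detection. It keeps #version first, because GLSL requires #version to come before anything other than comments and whitespace.

```python
def add_geometry_input_size_define(source: str, needs_explicit_size: bool) -> str:
    """Insert a CASTLE_GEOMETRY_INPUT_SIZE define into geometry shader source.

    Hypothetical sketch; assumes #version, if present, is on the first line.
    """
    body = " gl_in.length()" if needs_explicit_size else ""  # empty body: expands to nothing
    define = "#define CASTLE_GEOMETRY_INPUT_SIZE" + body
    lines = source.splitlines()
    if lines and lines[0].lstrip().startswith("#version"):
        lines.insert(1, define)  # right after #version
    else:
        lines.insert(0, define)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    shader = "#version 150\nin float my_variable[CASTLE_GEOMETRY_INPUT_SIZE];\n"
    print(add_geometry_input_size_define(shader, needs_explicit_size=True))
```

With needs_explicit_size=False, the declaration expands to in float my_variable[]; which is the form NVidia accepts.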

5.7. Inspecting and customizing shaders generated by the engine

The engine can generate GLSL shaders (as an X3D ComposedShader node) to render your shapes. This happens automatically under the hood in many situations (for example, when your shapes require bump mapping or shadow maps, or when you compile for OpenGL ES >= 2). You can run Castle Model Viewer with the command-line option --debug-log-shaders and force rendering using shaders (for example by the menu option View -> Shaders -> Enable For Everything) to view the generated GLSL code for your shaders in the console. You can even copy them, as a ComposedShader node, and adjust them if you like.

5.8. TODOs

TODO: the activate event doesn't relink the GLSL program yet. (isSelected and isValid work perfectly for any X3DShaderNode.)