Navigation component - extensions

Extensions introduced in Castle Game Engine related to navigation.

See also the documentation of supported nodes of the Navigation component and the X3D specification of the Navigation component.

Contents:

- DEPRECATED: Output events to generate camera matrix (Viewpoint.camera*Matrix events)
- Force vertical field of view (Viewpoint.fieldOfViewForceVertical)
- Control head bobbing (NavigationInfo.headBobbing* fields)
- Customize headlight (NavigationInfo.headlightNode)
- DEPRECATED: Specify blending sort (NavigationInfo.blendingSort)
- DEPRECATED: Force VRML time origin to be 0.0 at load time (NavigationInfo.timeOriginAtLoad)
- DEPRECATED: Fields direction and up and gravityUp for PerspectiveCamera, OrthographicCamera and Viewpoint nodes

1. DEPRECATED: Output events to generate camera matrix (Viewpoint.camera*Matrix events)

Deprecated: To convert between eye and world space in shaders (which was practically the only use case of this feature), use the shader effect library that defines GLSL functions like position_world_to_eye_space instead.

To every viewpoint node (this applies to all viewpoints usable in our engine, including all X3DViewpointNode descendants like Viewpoint and OrthoViewpoint, and even to the VRML 1.0 PerspectiveCamera and OrthographicCamera) we add output events that provide you with the current camera matrix.

One use for such matrices is to route them to your GLSL shaders (as uniform variables) and use them inside the shaders to transform between world and camera space.

    *Viewpoint {
      ... all normal *Viewpoint fields ...
      SFMatrix4f [out]    cameraMatrix
      SFMatrix4f [out]    cameraInverseMatrix
      SFMatrix3f [out]    cameraRotationMatrix
      SFMatrix3f [out]    cameraRotationInverseMatrix
      SFBool     [in,out] cameraMatrixSendAlsoOnOffscreenRendering  FALSE
    }

"cameraMatrix" transforms from world space (the global 3D space that we most often think within) to camera space (aka eye space; when thinking within this space, you know that the camera position is at (0, 0, 0), looking along -Z, with up in +Y). It takes care of both the camera position and orientation, so it's a 4x4 matrix.

"cameraInverseMatrix" is simply the inverse of this matrix, so it transforms from camera space back to world space.

"cameraRotationMatrix" again transforms from world space to camera space, but now it only takes care of camera rotations, disregarding the camera position. As such, it fits within a 3x3 matrix (9 floats), so it's smaller than the full cameraMatrix (4x4, 16 floats).

"cameraRotationInverseMatrix" is simply its inverse. These 3x3 matrices are ideal for transforming directions between world and camera space in shaders.

"cameraMatrixSendAlsoOnOffscreenRendering" controls when the four output events above are generated. The default (FALSE) behavior is that they are generated only for the camera that corresponds to the actual viewpoint, that is: for the camera settings used when rendering the scene to the screen.

The value TRUE causes the output matrix events to be generated also for temporary camera settings used for off-screen rendering (used when generating textures for GeneratedCubeMapTexture, GeneratedShadowMap, RenderedTexture). This is a little dirty, as cameras used for off-screen rendering do not (usually) have any relation to the actual viewpoint (for example, for GeneratedCubeMapTexture, the camera is positioned in the middle of the shape using the cube map). But this can be useful: when you route these events straight to the shaders, what the shaders usually need are the matrices of the "actual camera" (which is not necessarily the current viewpoint camera).

These events are usually generated only by the currently bound viewpoint node. The only exception is when you use RenderedTexture and set a viewpoint in RenderedTexture.viewpoint: in this case, RenderedTexture.viewpoint will generate the appropriate events (as long as you set cameraMatrixSendAlsoOnOffscreenRendering to TRUE). Conceptually, RenderedTexture.viewpoint is temporarily bound (although it doesn't send isBound/bindTime events).
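To make the four output matrices concrete, here is a minimal Python sketch that builds them from a camera position, look direction and up vector, using the usual "look at" construction. It is an illustration under stated assumptions, not the engine's implementation: all helper names are hypothetical, matrices are plain row-major lists applied to column vectors, and the element order in which X3D actually sends SFMatrix4f/SFMatrix3f values is not modeled.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return [c / length for c in v]


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def camera_rotation_matrix(direction, up):
    """3x3 world -> eye rotation (like cameraRotationMatrix).

    Rows are the camera's side (+X), up (+Y) and backward (+Z) axes,
    so the look direction maps to -Z and the up vector maps to +Y.
    """
    d = normalize(direction)
    side = normalize(cross(d, up))
    true_up = cross(side, d)  # unit length, orthogonal to d
    return [side, true_up, [-c for c in d]]


def transpose3(m):
    # A rotation matrix is orthonormal, so its inverse is its transpose
    # (like cameraRotationInverseMatrix).
    return [list(col) for col in zip(*m)]


def camera_matrix(position, direction, up):
    """4x4 world -> eye matrix (like cameraMatrix): rotate, then translate."""
    r = camera_rotation_matrix(direction, up)
    t = [-dot(row, position) for row in r]
    return [r[i] + [t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def camera_inverse_matrix(position, direction, up):
    """4x4 eye -> world matrix (like cameraInverseMatrix)."""
    rt = transpose3(camera_rotation_matrix(direction, up))
    return [rt[i] + [position[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def transform_point(m4, p):
    p4 = list(p) + [1.0]
    return [dot(row, p4) for row in m4[:3]]


def transform_direction(m3, v):
    return [dot(row, v) for row in m3]


# Camera at (5, 0, 0) looking along +X, with up +Y.
pos, direction, up = [5.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]
eye = transform_point(camera_matrix(pos, direction, up), [6.0, 0.0, 0.0])
print(eye)  # a point 1 unit in front of the camera: [0.0, 0.0, -1.0]
```

In this sketch the rotation-only inverse is obtained by simply transposing, because a rotation matrix is orthonormal; the full 4x4 inverse additionally adds the camera position back.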

2. Force vertical field of view (Viewpoint.fieldOfViewForceVertical)

    Viewpoint {
      SFBool [in,out] fieldOfViewForceVertical  FALSE
    }

The standard Viewpoint.fieldOfView by default specifies a minimum field of view. It will be either the horizontal or the vertical field of view, depending on the current aspect ratio (whether your window is taller or wider). Usually, this smart behavior is useful.

However, sometimes you really need to explicitly specify a vertical field of view. In this case, you can set fieldOfViewForceVertical to TRUE. Now Viewpoint.fieldOfView is interpreted differently: it's always the vertical field of view. The horizontal field of view will always be adjusted to follow the aspect ratio.
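The relationship between the two angles can be sketched in Python. This assumes the usual perspective relation tan(h/2) = aspect * tan(v/2), with aspect = width / height, and that the default mode applies fieldOfView to the shorter window side; view_angles is a hypothetical helper, not an engine function.

```python
import math


def view_angles(fov, width, height, force_vertical=False):
    """Return (horizontal, vertical) field of view in radians.

    fov is Viewpoint.fieldOfView; width/height are the window size in pixels.
    """
    aspect = width / height
    half_tan = math.tan(fov / 2)
    if force_vertical or width >= height:
        # Forced vertical, or a wider window in default mode:
        # fov is the vertical angle, horizontal follows the aspect ratio.
        vertical = fov
        horizontal = 2 * math.atan(half_tan * aspect)
    else:
        # Taller window, default mode: fov is the (smaller) horizontal angle.
        horizontal = fov
        vertical = 2 * math.atan(half_tan / aspect)
    return horizontal, vertical


# Portrait 1080x1920 window, fieldOfView = 90 degrees:
h, v = view_angles(math.radians(90), 1080, 1920)
print(math.degrees(h), math.degrees(v))  # horizontal stays 90, vertical is wider
h, v = view_angles(math.radians(90), 1080, 1920, force_vertical=True)
print(math.degrees(h), math.degrees(v))  # vertical stays 90, horizontal is narrower
```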

3. Control head bobbing (NavigationInfo.headBobbing* fields)

"Head bobbing" is the effect of the camera moving slightly up and down when you walk on the ground (when gravity works). This simulates our normal human vision: we usually can't keep our head at exactly the same height above the ground when walking or running :)

By default our engine does head bobbing (remember, only when gravity works, that is, when the navigation mode is WALK). This is common in FPS games.

Using the extensions below you can tune (or even turn off) the head bobbing behavior. For this we add new fields to the NavigationInfo node.

    NavigationInfo {
      ...
      SFFloat [in,out] headBobbing      0.02
      SFFloat [in,out] headBobbingTime  0.5
    }

Intuitively, headBobbing is the intensity of the whole effect (0 = no head bobbing) and headBobbingTime determines the duration of one step of a walking human.

The field headBobbing multiplied by the avatar height specifies how far the camera can move up and down. The avatar height is taken from the standard NavigationInfo.avatarSize (2nd array element). Set this to exactly 0 to disable head bobbing. This must always be < 1. For sensible effects, this should be something rather close to 0, like 0.02.

(See also TCastleWalkNavigation.HeadBobbing)

The field headBobbingTime determines how much time (in seconds) passes to make a full head bobbing sequence (the camera swings up and then down back to its original height).

(See also TCastleWalkNavigation.HeadBobbingTime)
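A minimal Python sketch of the camera offset these two fields imply. The sine-shaped swing is an assumption made for illustration (the engine's actual curve may differ); the amplitude rule (headBobbing times the 2nd avatarSize element) and the < 1 limit follow the text above, and 1.6 is the X3D default avatar height. head_bobbing_offset is a hypothetical name.

```python
import math


def head_bobbing_offset(t, head_bobbing=0.02, head_bobbing_time=0.5,
                        avatar_size=(0.25, 1.6, 0.75)):
    """Vertical camera offset after walking for t seconds (hypothetical helper)."""
    if head_bobbing >= 1.0:
        raise ValueError("headBobbing must be < 1")
    # Maximum displacement: headBobbing times the avatar height (2nd element).
    amplitude = head_bobbing * avatar_size[1]
    if amplitude == 0.0 or head_bobbing_time <= 0.0:
        return 0.0  # head bobbing disabled
    # One full sequence (swing up, then back down) every head_bobbing_time seconds.
    phase = (t % head_bobbing_time) / head_bobbing_time
    return amplitude * math.sin(math.pi * phase)  # assumed sine-shaped swing


for t in (0.0, 0.125, 0.25, 0.375, 0.5):
    print(f"t={t:.3f}s offset={head_bobbing_offset(t):.4f}")
```

With the default values the camera rises at most 0.02 * 1.6 = 0.032 units, halfway through each 0.5 second step.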

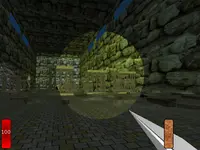

4. Customize headlight (NavigationInfo.headlightNode)


You can configure the appearance of the headlight by the headlightNode field of the NavigationInfo node.

    NavigationInfo {
      ...
      SFNode [in,out] headlightNode  NULL  # [X3DLightNode]
    }

headlightNode defines the type and properties of the light following the avatar ("head light"). You can put any valid X3D light node here. If you don't give anything here (but still request the headlight by NavigationInfo.headlight = TRUE, which is the default) then the default DirectionalLight will be used for the headlight.

Almost everything (with the exceptions listed below) works as usual for all the light sources. Changing colors and intensity obviously works. Changing the light type, including making it a spot light or a point light, also works.

Note that for nice spot headlights, you will usually want to enable per-pixel lighting on everything by View->Shaders->Enable For Everything. Otherwise the ugliness of the default fixed-function Gouraud shading will be visible in case of spot lights (you will see how the spot shape "crawls" on the triangles, instead of staying in a nice circle). So to see the spot light cone perfectly, and also to see SpotLight.beamWidth perfectly, enable per-pixel shader lighting.

Note that instead of setting the headlight to a spot light, you may also consider cheating: you can create a screen effect that simulates the headlight. See Castle Model Viewer "Screen Effects -> Headlight" for a demo, and the screen effects documentation for ways to create this yourself. This is an entirely different beast, more cheating but also potentially more efficient (for starters, you don't have to use per-pixel lighting on everything to make it nicely round).

The exceptions are:

- Your specified "location" of the light (if you put a PointLight or SpotLight here) will be ignored. Instead we will synchronize the light location in each frame to the player's location (in world coordinates). You can ROUTE your light's location to something, to see it changing.
- Similarly, your specified "direction" of the light (if this is a DirectionalLight or SpotLight) will be ignored. Instead we will keep it synchronized with the player's normalized direction (in world coordinates). You can ROUTE this direction to see it changing.
- The "global" field doesn't matter. The headlight always shines on everything, ignoring the normal VRML/X3D light scope rules.

5. DEPRECATED: Specify blending sort (NavigationInfo.blendingSort)

Deprecated: Use TCastleViewport.BlendingSort to control this. See blending for more information about blending.

    NavigationInfo {
      ...
      SFString [in,out] blendingSort  DEFAULT  # ["DEFAULT", "NONE", "2D", "3D"]
    }

Values other than "DEFAULT" force a specific blending sort treatment when rendering, which is useful because some scenes have specific requirements to be rendered sensibly. See TBlendingSort.
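As an illustration of what the non-default values mean, here is a Python sketch that orders transparent shapes before rendering. The criteria are assumptions for illustration: "2D" is modeled as sorting by Z (larger Z drawn later, so on top), "3D" as back-to-front by distance from the camera to each shape's center, and "NONE" keeps the original order; consult TBlendingSort for the engine's exact rules. Shape and sort_blended_shapes are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Shape:
    """Hypothetical transparent shape with a world-space center point."""
    name: str
    center: tuple


def sort_blended_shapes(shapes, blending_sort, camera_position=(0.0, 0.0, 0.0)):
    """Return shapes in drawing order (first element is drawn first)."""
    if blending_sort == "NONE":
        return list(shapes)  # no sorting: keep scene order
    if blending_sort == "2D":
        # Smaller Z first, so shapes with larger Z are drawn on top.
        return sorted(shapes, key=lambda s: s.center[2])
    if blending_sort == "3D":
        # Back to front: the shape farthest from the camera is drawn first.
        return sorted(shapes, key=lambda s: math.dist(s.center, camera_position),
                      reverse=True)
    # "DEFAULT" means "let the engine decide", which this sketch does not model.
    raise ValueError(f"unsupported blendingSort: {blending_sort}")


shapes = [Shape("near", (0.0, 0.0, -2.0)), Shape("front", (0.0, 0.0, 5.0)),
          Shape("far", (0.0, 0.0, -10.0))]
print([s.name for s in sort_blended_shapes(shapes, "3D")])  # ['far', 'front', 'near']
```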

6. DEPRECATED: Force VRML time origin to be 0.0 at load time (NavigationInfo.timeOriginAtLoad)

By default, the VRML/X3D time origin is at 00:00:00 GMT January 1, 1970 and SFTime reflects real-world time (taken from your OS). This is uncomfortable for single-user games (although I admit it is great for multi-user worlds).

You can change this by using NavigationInfo node:

    NavigationInfo {
      ...
      SFBool [] timeOriginAtLoad  FALSE
    }

The default value, FALSE, means the standard VRML behavior. When TRUE, the time origin for this VRML scene is considered to be 0.0 when the browser loads the file. For example, this means that you can easily specify the desired startTime values for time-dependent nodes (like MovieTexture or TimeSensor) to start playing at load time, or a given number of seconds after loading the scene.
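The difference boils down to a subtraction. In this hypothetical Python helper, wall-clock times are seconds since the Unix epoch (the same origin as the standard VRML/X3D time), and the function returns the SFTime value the scene sees:

```python
def scene_time(wallclock, load_wallclock, time_origin_at_load):
    """SFTime value seen by the scene (hypothetical helper).

    wallclock and load_wallclock are seconds since 1970-01-01 00:00:00 GMT.
    """
    if time_origin_at_load:
        return wallclock - load_wallclock  # 0.0 at the moment of loading
    return wallclock  # standard VRML/X3D: real-world time


load = 1_700_000_000.0  # example wall-clock time when the file was loaded
print(scene_time(load + 5.0, load, time_origin_at_load=True))   # 5.0
print(scene_time(load + 5.0, load, time_origin_at_load=False))  # 1700000005.0
```

So with timeOriginAtLoad TRUE, a startTime of 5 means "5 seconds after loading", while under the standard rules the same value denotes a moment on January 1, 1970.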

7. DEPRECATED: Fields direction and up and gravityUp for PerspectiveCamera, OrthographicCamera and Viewpoint nodes

The standard VRML way of specifying the camera orientation (look direction and up vector) is to use the orientation field, which says how to rotate the standard look direction vector (<0,0,-1>) and the standard up vector (<0,1,0>). While I agree that this way of specifying the camera orientation has some advantages (e.g. we don't have the uncertainty "Is the look direction vector length meaningful?"), I think that it is very uncomfortable for humans.

Reasoning:

- It's very difficult for a human to write such an orientation field without some calculator. When you set up your camera, you're thinking about "In what direction does it look?" and "Where is my head?", i.e. you're thinking about look and up vectors.
- Converting between orientation and look and up vectors is trivial for computers but quite hard for humans without a calculator (especially if real-world values are involved, which usually don't look like "nice numbers"). This means that when I look at the source code of your VRML camera node and I see your orientation field, I still have no idea how your camera is oriented. I have to fire up some calculating program, or one of the programs that view VRML (like Castle Model Viewer). This is not some terrible disadvantage, but it still matters for me. orientation is written with respect to the standard look (<0,0,-1>) and up (<0,1,0>) vectors, so if I want to imagine the camera orientation in my head, I have to remember these standard vectors.
- The 4th component of orientation is in radians, which are not nice for humans (when specified as floating point constants, like in VRML files, as opposed to multiples of π, as is usual in mathematics). E.g. what's more obvious for you: "1.5707963268 radians" or "90 degrees"? Again, these are equal for a computer, but not readily equal for a human (actually, "1.5707963268 radians" is not precisely equal to "90 degrees").
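To see the conversion a reader has to do to decode an orientation value, here it is sketched in Python with Rodrigues' rotation formula. The function names are hypothetical; the standard vectors <0,0,-1> and <0,1,0> come from the text above.

```python
import math


def rotate(v, axis, angle):
    """Rotate vector v around axis (any nonzero length) by angle radians (Rodrigues)."""
    length = math.sqrt(sum(c * c for c in axis))
    k = [c / length for c in axis]
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    k_cross_v = [k[1] * v[2] - k[2] * v[1],
                 k[2] * v[0] - k[0] * v[2],
                 k[0] * v[1] - k[1] * v[0]]
    k_dot_v = sum(a * b for a, b in zip(k, v))
    return [v[i] * cos_a + k_cross_v[i] * sin_a + k[i] * k_dot_v * (1 - cos_a)
            for i in range(3)]


def orientation_to_direction_up(orientation):
    """Convert a VRML/X3D orientation (x, y, z, angle) to (direction, up)."""
    x, y, z, angle = orientation
    direction = rotate([0.0, 0.0, -1.0], (x, y, z), angle)
    up = rotate([0.0, 1.0, 0.0], (x, y, z), angle)
    return direction, up


direction, up = orientation_to_direction_up((0, 1, 0, 1.5707963268))
print([round(c, 6) for c in direction], [round(c, 6) for c in up])  # looking along -X, up still +Y
```

For orientation 0 1 0 1.5707963268 the camera looks along -X with up +Y, which the direction and up fields described below let you write directly as direction -1 0 0 and up 0 1 0.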

Also, the VRML 2.0 spec says that the gravity upward vector should be taken as the +Y vector transformed by whatever transformation is applied to the Viewpoint node. This causes similar problems, since e.g. to have the gravity upward vector in +Z you have to apply a rotation to your Viewpoint node.

So I decided to create new fields for the PerspectiveCamera, OrthographicCamera and Viewpoint nodes to allow an alternative way to specify an orientation:

    PerspectiveCamera / OrthographicCamera / Viewpoint {
      ... all normal *Viewpoint fields ...
      MFVec3f [in,out] direction  []
      MFVec3f [in,out] up         []
      SFVec3f [in,out] gravityUp  0 1 0
    }

If at least one vector in the direction field is specified, then it is taken as the camera look vector. Analogously, if at least one vector in the up field is specified, then it is taken as the camera up vector. This means that if you specify some vectors for direction and up, then the value of the orientation field is ignored.

The direction and up fields should have either none or exactly one element. As usual, the direction and up vectors can't be parallel and can't be zero. They don't have to be orthogonal: the up vector will always be silently corrected to be orthogonal to the direction. The lengths of these vectors are always ignored.

As for gravity: the VRML 2.0 spec says to take the standard +Y vector and transform it by whatever transformation was applied to the Viewpoint node. We modify this to say: take the gravityUp vector and transform it by whatever transformation was applied to the Viewpoint node. Since the default value of gravityUp is just +Y, things work 100% conforming to the VRML spec if you don't specify the gravityUp field.
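A Python sketch of this gravity rule, assuming only the linear (rotation/scale) part of the Viewpoint's transformation matters for a direction and that the result is normalized. It shows that the standard approach (+Y with the Viewpoint rotated 90 degrees around X) and the extension (gravityUp 0 0 1 with no transformation) give the same world-space gravity up vector. world_gravity_up and rotation_x are hypothetical helpers.

```python
import math


def rotation_x(angle):
    """3x3 right-handed rotation matrix around the +X axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def world_gravity_up(gravity_up, viewpoint_linear_transform):
    """Transform gravityUp by the Viewpoint's 3x3 transformation and normalize."""
    v = [sum(m * g for m, g in zip(row, gravity_up))
         for row in viewpoint_linear_transform]
    length = math.sqrt(sum(c * c for c in v))
    return [c / length for c in v]


# Standard VRML: default +Y, with the Viewpoint rotated 90 degrees around X.
standard = world_gravity_up([0.0, 1.0, 0.0], rotation_x(math.pi / 2))
# Extension: gravityUp 0 0 1, no transformation needed.
extension = world_gravity_up([0.0, 0.0, 1.0], IDENTITY)
print([round(c, 6) for c in standard], extension)  # both point along +Z
```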

In Castle Model Viewer, the "Print current camera node" command (key shortcut Ctrl+C) writes the camera node in both versions: one that uses the orientation field and transformations to get the gravity upward vector, and one that uses the direction, up and gravityUp fields.